Mamba for cardiac disease diagnosis

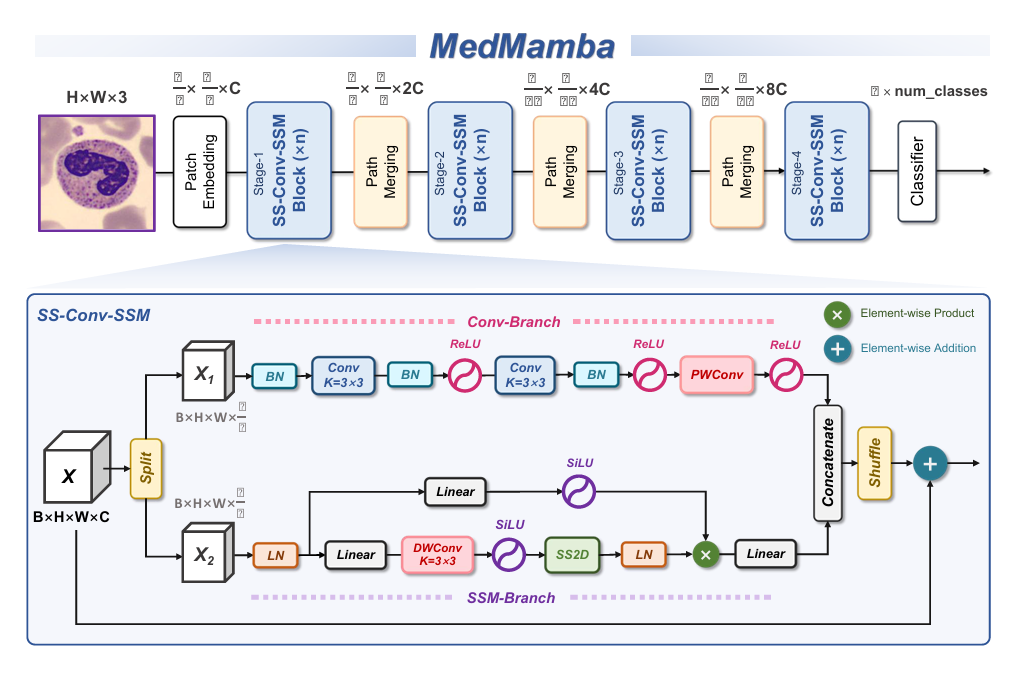

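1. MedMamba reproduction

The first step was to reproduce the MedMamba experiments so that it could serve as the base architecture for later work.

MedMamba is a 2D model and hard to adapt, so the earlier segmentation network is modified instead.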

2. echo-mi experiments

2.1 idea

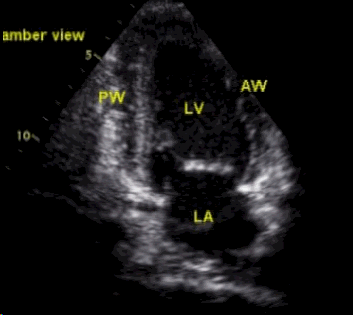

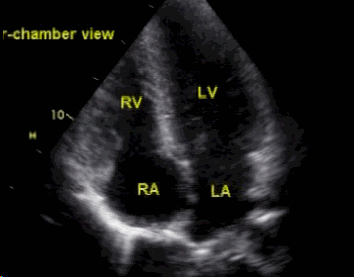

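To diagnose early myocardial infarction (MI) with a Mamba model trained on dual-view echocardiography (two-chamber plus four-chamber), a dual-path spatiotemporal fusion Mamba architecture (Dual-Path Spatiotemporal Fusion Mamba, DPSF-Mamba) is proposed. It targets the fusion of spatiotemporal features across multiple cardiac ultrasound views. The core ideas are as follows: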

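I. Core design approach

Dual-path heterogeneous feature extraction

Independent encoding paths: dedicated Mamba blocks are designed for the two-chamber (2C) and four-chamber (4C) views, each adapted to the local structural features of its view.

2C path: focuses on motion abnormalities of the left-ventricular anterior wall and apex (regions sensitive to early MI).

4C path: captures septal and lateral wall motion and overall ventricular coordination (key diagnostic indicators).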

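Dynamic gated fusion module (DGFM): introduces learnable gating weights to adaptively fuse the features from the two paths. The gate combines trainable weights with a Sigmoid function, so feature importance is assigned dynamically.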
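As a minimal sketch of what such a gate could look like (the formula itself is not preserved in these notes, so the form below is an assumption): a scalar gate g = sigmoid(w · [f_2C; f_4C] + b) is computed from the concatenated path features, and the fused feature is g · f_2C + (1 − g) · f_4C. The names `gated_fusion`, `w`, and `b` are hypothetical.

```python
import math


def sigmoid(x):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(f_2c, f_4c, w, b=0.0):
    """Fuse two equal-length feature vectors with one scalar gate.

    Assumed form (not taken from the notes):
        g     = sigmoid(w . [f_2c; f_4c] + b)
        fused = g * f_2c + (1 - g) * f_4c
    `w` has length len(f_2c) + len(f_4c); in training it would be learned.
    """
    concat = list(f_2c) + list(f_4c)
    g = sigmoid(sum(wi * xi for wi, xi in zip(w, concat)) + b)
    fused = [g * a + (1.0 - g) * c for a, c in zip(f_2c, f_4c)]
    return fused, g


# With all-zero weights the gate is 0.5, i.e. a plain average of both views.
fused, g = gated_fusion([1.0, 3.0], [3.0, 5.0], w=[0.0, 0.0, 0.0, 0.0])
```

In this form the gate value `g` is the per-sample weight of the 2C view, and `1 - g` is the weight of the 4C view, which is the kind of quantity a gating-weight heatmap would display.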

Multi-task jointly optimized classification head

//todo

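II. Technical advantages

| Module | Limitation of standard Mamba | DPSF-Mamba improvement | Clinical value |
|---|---|---|---|
| Multi-view handling | A single path ignores differences between views | Dual-path heterogeneous encoding + dynamic fusion | Reduces view bias and improves sensitivity to small lesions |
| Spatiotemporal features | Models long sequences but captures spatial relations weakly | Spatiotemporal slice regrouping + coordinate attention | Captures motion abnormalities and structural deformation together |
| Data efficiency | Needs large amounts of labeled data | Multi-task learning with shared features | Eases the shortage of ultrasound annotations |
| Interpretability | Black-box decisions | Gating weights visualize each view's contribution | Helps clinicians understand the basis of the AI diagnosis |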

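III. Validation plan for the expected results

Interpretability analysis

Plot heatmaps of the gating weights to check how strongly the model attends to the view that shows the lesion, for example:

Anterior-wall infarction patient: the 4C view weight peaks at 0.83 (dominates the diagnosis)

Apical infarction patient: the 2C view weight rises to 0.79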

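IV. Potential challenges and solutions

Challenge 1: incomplete dual-view data (for example, some patients are missing one view)

Solution: introduce cross-view knowledge distillation, in which a teacher network trained on complete data guides a single-view student network.

Challenge 2: interference from ultrasound artifacts

Solution: add an adversarial denoising module (such as a Conditional GAN) in front of the Mamba.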

2.2 faec_advance experiment

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| faec | 0.71875 | 0.7013 | 0.3077 | 1.0 | 0.1818 | 1.0 |

CAMUS, multi-view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_6 fold_1 | 0.8125 | 0.858333 | 0.769230 | 0.714286 | 0.833333 | 0.8 |

| Experiment_6 fold_2 | 0.75 | 0.741666 | 0.555555 | 0.833333 | 0.416666 | 0.949999 |

| Experiment_6 fold_3 | 0.8125 | 0.899999 | 0.699999 | 0.875 | 0.583333 | 0.949999 |

| Experiment_6 fold_4 | 0.78125 | 0.852814 | 0.588235 | 0.833333 | 0.454545 | 0.952380 |

| Experiment_6 fold_5 | 0.71875 | 0.701298 | 0.307692 | 1.0 | 0.1818 | 1.0 |

| Experiment_6 avg | 0.775 | 0.8108 | 0.5841 | 0.8512 | 0.4939 | 0.9305 |
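Every column in these tables except auc follows from the four confusion-matrix counts. As a worked check, the fold_5 row is consistent with a 32-sample validation fold holding 11 positives and 21 negatives, with TP=2, FN=9, FP=0, TN=21. These counts are inferred from the reported values and are not stated in the notes; `binary_metrics` is a hypothetical helper name.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute threshold-based metrics from confusion-matrix counts."""
    def safe_div(num, den):
        # Return 0.0 when a ratio is undefined (e.g. no predicted positives).
        return num / den if den else 0.0

    return {
        "acc": safe_div(tp + tn, tp + fp + fn + tn),
        "f1": safe_div(2 * tp, 2 * tp + fp + fn),
        "precision": safe_div(tp, tp + fp),
        "recall": safe_div(tp, tp + fn),          # sensitivity
        "specificity": safe_div(tn, tn + fp),
    }


# Inferred counts for Experiment_6 fold_5 (an assumption, see above).
fold_5 = binary_metrics(tp=2, fp=0, fn=9, tn=21)
```

The high precision and specificity in that row therefore come from only two predicted positives, which is why recall and f1 are so low. AUC cannot be recovered this way because it depends on the ranking of the predicted scores.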

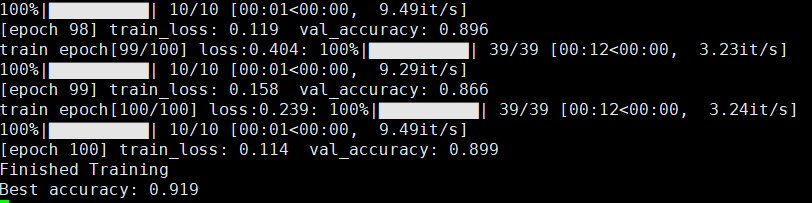

3. MIMamba experiments

The Mamba from the earlier segmentation network is adapted for classification.

3.1 CAMUS dataset

CAMUS A2C experiments

| name | acc | auc | f1 | precision | recall |

|---|---|---|---|---|---|

| Experiment_4 fold_1 | 0.375 | 0.533333 | 0.545454 | 0.375 | 1.0 |

| Experiment_4 fold_2 | 0.34375 | 0.558441 | 0.511628 | 0.34375 | 1.0 |

| Experiment_5 fold_1 | 0.75 | 0.699999 | 0.636364 | 0.699999 | 0.583333 |

| Experiment_5 fold_2 | 0.71875 | 0.670995 | 0.571428 | 0.6 | 0.545454 |

| Experiment_5 fold_3 | 0.71875 | 0.636363 | 0.470588 | 0.571428 | 0.4 |

| Experiment_5 fold_4 | 0.71875 | 0.590909 | 0.470588 | 0.571428 | 0.4 |

| Experiment_5 fold_5 | 0.625 | 0.490909 | 0.4 | 0.4 | 0.4 |

| Experiment_5 avg | 0.70625 | 0.617835 | 0.509794 | 0.568571 | 0.425757 |

CAMUS, multi-view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_7 fold_1 | 0.84375 | 0.837499 | 0.761904 | 0.888888 | 0.666666 | 0.949999 |

| Experiment_7 fold_2 | 0.75 | 0.708333 | 0.555555 | 0.833333 | 0.416666 | 0.949999 |

| Experiment_7 fold_3 | 0.8125 | 0.8375 | 0.727272 | 0.8 | 0.666666 | 0.899999 |

| Experiment_7 fold_4 | 0.8125 | 0.818181 | 0.75 | 0.692307 | 0.818181 | 0.809523 |

| Experiment_7 fold_5 | 0.71875 | 0.714285 | 0.307692 | 1.0 | 0.181818 | 1.0 |

| Experiment_7 (monitoring acc) | 0.7875 | 0.7832 | 0.6205 | 0.8429 | 0.5500 | 0.9219 |

| Experiment_8 fold_1 | 0.65625 | 0.875 | 0.266666 | 0.666666 | 0.166666 | 0.949999 |

| Experiment_8 fold_2 | 0.75 | 0.816666 | 0.666666 | 0.666666 | 0.666666 | 0.8 |

| Experiment_8 fold_3 | 0.71875 | 0.858333 | 0.571428 | 0.666666 | 0.5 | 0.85 |

| Experiment_8 fold_4 | 0.65625 | 0.8658 | 0.0 | 0 | 0 | 1 |

| Experiment_8 fold_5 | 0.6875 | 0.796536 | 0.583333 | 0.538461 | 0.636363 | 0.714285 |

| Experiment_8 (monitoring auc) | ||||||

| Experiment_9 fold_1 | 0.75 | 0.879166 | 0.5 | 1.0 | 0.333333 | 1.0 |

| Experiment_9 fold_2 | 0.65625 | 0.695833 | 0.153846 | 1.0 | 0.083333 | 1.0 |

| Experiment_9 fold_3 | 0.65625 | 0.770833 | 0.153846 | 1.0 | 0.083333 | 1.0 |

| Experiment_9 fold_4 | 0.6875 | 0.649350 | 0.166666 | 1.0 | 0.090909 | 1.0 |

| Experiment_9 fold_5 | 0.75 | 0.761904 | 0.5 | 0.8 | 0.363636 | 0.952380 |

| Experiment_9 (precision) | ||||||

| Experiment_10 fold_1 | 0.84375 | 0.875 | 0.814814 | 0.733333 | 0.916666 | 0.8 |

| Experiment_10 fold_2 | 0.78125 | 0.75 | 0.695652 | 0.727272 | 0.666666 | 0.85 |

| Experiment_10 fold_3 | 0.84375 | 0.85 | 0.761904 | 0.888888 | 0.666666 | 0.949999 |

| Experiment_10 fold_4 | 0.75 | 0.779220 | 0.692307 | 0.6 | 0.818181 | 0.714285 |

| Experiment_10 fold_5 | 0.625 | 0.675324 | 0.625 | 0.476190 | 0.909090 | 0.476190 |

| Experiment_10 (monitoring f1) | 0.7688 | 0.7859 | 0.7179 | 0.6851 | 0.7955 | 0.7581 |

| Experiment_11 fold_1 | 0.90625 | 0.85 | 0.869565 | 0.909090 | 0.833333 | 0.949999 |

| Experiment_11 fold_2 | 0.75 | 0.754166 | 0.714285 | 0.625 | 0.833333 | 0.699999 |

| Experiment_11 fold_3 | 0.78125 | 0.820833 | 0.740740 | 0.666666 | 0.833333 | 0.75 |

| Experiment_11 fold_4 | 0.75 | 0.744588 | 0.714285 | 0.588235 | 0.909090 | 0.666666 |

| Experiment_11 fold_5 | 0.65625 | 0.679653 | 0.645161 | 0.5 | 0.909090 | 0.523809 |

| Experiment_best | 0.8063 | 0.8129 | 0.7321 | 0.7512 | 0.7242 | 0.8547 |

| Experiment_15 fold_1 | 0.875 | 0.870833 | 0.846153 | 0.785714 | 0.916666 | 0.85 |

| Experiment_15 fold_2 | 0.8125 | 0.762499 | 0.769230 | 0.714285 | 0.833333 | 0.8 |

| Experiment_15 fold_3 | 0.84375 | 0.854166 | 0.761904 | 0.888888 | 0.666666 | 0.949999 |

| Experiment_15 fold_4 | 0.75 | 0.709956 | 0.6 | 0.666666 | 0.545454 | 0.857142 |

| Experiment_15 fold_5 | 0.75 | 0.718614 | 0.692307 | 0.6 | 0.818181 | 0.714285 |

| Experiment_15 | ||||||

| Experiment_18 fold_1 | 0.78125 | 0.725 | 0.72 | 0.6923 | 0.75 | 0.8 |

| Experiment_23 fold_1 | 0.78125 | 0.7625 | 0.6666 | 0.7777 | 0.5833 | 0.8999 |

| Experiment_24 fold_1 | 0.8125 | 0.8166 | 0.6999 | 0.875 | 0.5833 | 0.949999 |

| Experiment_25 fold_1 | 0.84375 | 0.862499 | 0.761904 | 0.888888 | 0.666666 | 0.949999 |

Using AdamW

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_28 fold_1 | 0.8125 | 0.791666 | 0.75 | 0.75 | 0.75 | 0.85 |

| Experiment_29 fold_1 | 0.8125 | 0.7958 | 0.75 | 0.75 | 0.75 | 0.85 |

CAMUS mi_mamba, A2C and A4C paths

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_26 fold_1 | 0.78125 | 0.766666 | 0.72 | 0.692307 | 0.75 | 0.8 |

Switched to AdamW

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_27 fold_1 | 0.8125 | 0.75 | 0.75 | 0.75 | 0.75 | 0.85 |

CAMUS, multi-view, MultiStageFusion

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_12 fold_1 | 0.8125 | 0.866666 | 0.699999 | 0.875 | 0.583333 | 0.949999 |

| Experiment_12 fold_2 | 0.75 | 0.720833 | 0.666666 | 0.666666 | 0.666666 | 0.8 |

| Experiment_12 fold_3 | 0.78125 | 0.75 | 0.631579 | 0.857142 | 0.5 | 0.949 |

| Experiment_13 fold_1 | 0.8125 | 0.754166 | 0.699999 | 0.875 | 0.583333 | 0.949999 |

| Experiment_14 fold_1 | 0.8125 | 0.891666 | 0.785714 | 0.6875 | 0.916666 | 0.75 |

CAMUS multi-scale fusion model

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_17 fold_1 | 0.71875 | 0.695833 | 0.666666 | 0.6 | 0.75 | 0.699999 |

CAMUS hierarchical fusion model mi_mamba_hierarchical_model

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_16 fold_1 | 0.78125 | 0.8208 | 0.7407 | 0.666666 | 0.833333 | 0.75 |

| Experiment_16 fold_2 | 0.6875 | 0.5208 | 0.375 | 0.75 | 0.25 | 0.9499 |

| Experiment_16 fold_3 | 0.75 | 0.8 | 0.6 | 0.75 | 0.5 | 0.8999 |

| Experiment_16 fold_4 | 0.75 | 0.6883 | 0.6 | 0.666666 | 0.545454 | 0.857142 |

| Experiment_16 fold_5 | 0.6875 | 0.580086 | 0.375 | 0.6 | 0.272727 | 0.904762 |

| Experiment_19 fold_1 | 0.75 | 0.758333 | 0.5555 | |||

| Experiment_20 fold_1 | 0.75 | 0.7333 | 0.6666 | 0.666666 | 0.666666 | 0.8 |

| Experiment_21 fold_1 | 0.78125 | 0.75 | 0.695652 | 0.727272 | 0.666666 | 0.85 |

| Experiment_22 fold_1 | 0.75 | 0.6875 | 0.5555 | 0.8333 | 0.416666 | 0.9499 |

| | 0.75 | 0.6958 | 0.6 | 0.75 | 0.5 | 0.899999 |

| Switched to AdamW | ||||||

| Experiment_30 fold_1 | 0.78125 | 0.708333 | 0.666666 | 0.777777 | 0.583333 | 0.899999 |

cross-ssm

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_32 fold_1 | 0.625 | 0.475 | 0 | 0 | 0 | 1 |

| Experiment_33 fold_1 | 0.78125 | 0.783333 | 0.666666 | 0.777777 | 0.583333 | 0.899999 |

| Experiment_34 fold_1 gate | 0.75 | 0.754166 | 0.636363 | 0.699999 | 0.583333 | 0.85 |

| Experiment_35 fold_1 gate w | 0.8125 | 0.804166 | 0.727272 | 0.8 | 0.666666 | 0.899999 |

| Experiment_36 fold_1 attn w | 0.75 | 0.720833 | 0.6 | 0.75 | 0.5 | 0.899999 |

| Experiment_37 fold_1 gate attn w | 0.8125 | 0.825 | 0.727272 | 0.8 | 0.666666 | 0.899999 |

| Experiment_ fold_1 attn gate w | 0.75 | 0.774999 | 0.636363 | 0.699999 | 0.583333 | 0.85 |

Judging from the results above, cross-ssm works reasonably well, so cross-ssm is kept for testing the CAMUS frame-padding strategies.

cross-ssm gate attn w

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_39 fold_1 repeat | 0.78125 | 0.729166 | 0.666666 | 0.777777 | 0.583333 | 0.899999 |

| Experiment_40 fold_1 interpolate | 0.84375 | 0.845833 | 0.8 | 0.769230 | 0.833333 | 0.85 |

| Experiment_40 fold_1 random_repeat | 0.75 | 0.754166 | 0.666666 | 0.666666 | 0.666666 | 0.8 |

| Experiment_41 fold_1 cyclic | 0.8125 | 0.791666 | 0.75 | 0.75 | 0.75 | 0.85 |

| Experiment_42 fold_1 reflect | 0.71875 | 0.745833 | 0.666666 | 0.6 | 0.75 | 0.699999 |

| Experiment_43 fold_1 noise | 0.75 | 0.745833 | 0.692307 | 0.642857 | 0.75 | 0.75 |

| Experiment_44 fold_1 random | 0.8125 | 0.783333 | 0.727272 | 0.8 | 0.666666 | 0.899999 |
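The padding strategies compared above can be sketched as index maps from a short clip to a fixed-length one. The mode names `repeat`, `interpolate`, `cyclic`, and `reflect` come from the table; their exact semantics in the experiments are not described in the notes, so the definitions below are assumptions, and `noise`, `random`, and `random_repeat` are left out because their behavior cannot be inferred. `pad_indices` is a hypothetical helper.

```python
def pad_indices(n_frames, target, mode):
    """Return the source-frame index used at each of `target` output slots."""
    if n_frames < 1 or target < 1:
        raise ValueError("n_frames and target must be positive")
    if n_frames >= target:
        return list(range(target))            # assumed: long clips are truncated
    if mode == "repeat":                      # hold the last frame
        return list(range(n_frames)) + [n_frames - 1] * (target - n_frames)
    if mode == "cyclic":                      # loop the clip from the start
        return [i % n_frames for i in range(target)]
    if mode == "reflect":                     # forward, then backward (ping-pong)
        period = max(2 * (n_frames - 1), 1)
        folded = [i % period for i in range(target)]
        return [k if k < n_frames else period - k for k in folded]
    if mode == "interpolate":                 # stretch in time, nearest frame
        scale = (n_frames - 1) / (target - 1)
        return [round(i * scale) for i in range(target)]
    raise ValueError(f"unknown mode: {mode}")


# A 4-frame clip padded to 8 frames under each strategy.
examples = {m: pad_indices(4, 8, m)
            for m in ("repeat", "cyclic", "reflect", "interpolate")}
```

Of these, only `interpolate` keeps the cardiac motion monotone in time across the whole padded clip; the others either freeze or replay part of the cycle.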

Retry with the hidden size reduced to 256

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_60 fold_1 repeat | 0.75 | 0.766666 | 0.636363 | 0.699999 | 0.583333 | 0.85 |

| Experiment_61 fold_1 interpolate | 0.78125 | 0.829166 | 0.666666 | 0.777777 | 0.583333 | 0.899999 |

| Experiment_62 fold_1 random_repeat | 0.8125 | 0.8083 | 0.7692 | 0.714285 | 0.833333 | 0.8 |

| Experiment_63 fold_1 cyclic | 0.78125 | 0.716666 | 0.72 | 0.6923 | 0.75 | 0.8 |

| Experiment_64 fold_1 reflect | 0.78125 | 0.795833 | 0.666666 | 0.777777 | 0.583333 | 0.899999 |

| Experiment_65 fold_1 noise | 0.8125 | 0.795833 | 0.75 | 0.75 | 0.75 | 0.85 |

| Experiment_66 fold_1 random | 0.78125 | 0.7875 | 0.695652 | 0.727272 | 0.666666 | 0.85 |

cross-ssm

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_45 fold_1 | 0.84375 | 0.858333 | 0.761904 | 0.888888 | 0.666666 | 0.949999 |

| Experiment_45 fold_1 | 0.78125 | 0.8 | 0.72 | 0.692307 | 0.75 | 0.8 |

| Experiment_46 fold_1 | 0.71875 | 0.704166 | 0.666666 | 0.6 | 0.75 | 0.699999 |

| Experiment_47 fold_1 | 0.78125 | 0.758333 | 0.695652 | 0.727272 | 0.666666 | 0.85 |

| Experiment_48 fold_1 b8 | 0.78125 | 0.779166 | 0.72 | 0.692307 | 0.75 | 0.8 |

| Experiment_49 fold_1 b16 | 0.78125 | 0.758333 | 0.740740 | 0.666666 | 0.833333 | 0.75 |

| Increased model feat to [32, 64, 128, 256] | ||||||

| Experiment_50 fold_1 b8 | 0.78125 | 0.741666 | 0.740740 | 0.666666 | 0.833333 | 0.75 |

| Experiment_51 fold_1 b8 | 0.78125 | 0.7875 | 0.758620 | 0.647058 | 0.916666 | 0.699999 |

| Experiment_52 fold_1 b8 | 0.75 | 0.804166 | 0.714285 | 0.625 | 0.833333 | 0.699999 |

| Switched back to padding to 32 frames | ||||||

| Experiment_53 fold_1 | 0.78125 | 0.779166 | 0.72 | 0.6923 | 0.75 | 0.8 |

| Experiment_54 fold_1 | 0.78125 | 0.7625 | 0.666666 | 0.7777 | 0.583333 | 0.899999 |

| Increased hidden size to 512 | ||||||

| Experiment_56 fold_1 | 0.71875 | 0.666666 | 0.666666 | 0.6 | 0.75 | 0.699999 |

| Experiment_57 fold_1 | 0.78125 | 0.729166 | 0.666666 | 0.777777 | 0.583333 | 0.899999 |

| hidden size 256 | ||||||

| Experiment_58 fold_1 | 0.78125 | 0.754166 | 0.740740 | 0.666666 | 0.833333 | 0.75 |

| hidden size 768 | ||||||

| Experiment_59 fold_1 | 0.78125 | 0.7875 | 0.6315 | 0.8571 | 0.5 | 0.9499 |

All the experiments above used the bce loss.

Loss functions

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_67 fold_1 focal | 0.6875 | 0.770833 | 0.545454 | 0.6 | 0.5 | 0.8 |

| Experiment_68 fold_1 dice | 0.75 | 0.779166 | 0.6 | 0.75 | 0.5 | 0.899999 |

| Experiment_69 fold_1 bce_dice | 0.75 | 0.729166 | 0.636363 | 0.699999 | 0.583333 | 0.85 |

| Experiment_70 fold_1 tversky | 0.875 | 0.829166 | 0.846153 | 0.785714 | 0.916666 | 0.85 |

| Experiment_71 fold_1 asymmetric | 0.78125 | 0.766666 | 0.72 | 0.6923 | 0.75 | 0.8 |
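For reference, a minimal sketch of a Tversky loss for binary classification, written in plain Python. The weights `alpha` (false positives) and `beta` (false negatives) used in Experiment_70 are not recorded in the notes, so the defaults below are placeholders; with `alpha = beta = 0.5` the loss reduces to the Dice loss.

```python
def tversky_loss(probs, labels, alpha=0.3, beta=0.7, eps=1e-7):
    """Tversky loss = 1 - TP / (TP + alpha * FP + beta * FN), on soft counts.

    probs  : predicted probabilities of the positive class
    labels : ground-truth labels (0 or 1)
    alpha, beta are placeholder values, not the ones used in the experiments.
    """
    tp = sum(p * y for p, y in zip(probs, labels))
    fp = sum(p * (1 - y) for p, y in zip(probs, labels))
    fn = sum((1 - p) * y for p, y in zip(probs, labels))
    return 1.0 - (tp + eps) / (tp + alpha * fp + beta * fn + eps)


# With beta > alpha, one missed positive costs more than one false alarm.
loss_miss = tversky_loss([1.0, 0.0], [1, 1])         # one false negative
loss_false_alarm = tversky_loss([1.0, 1.0], [1, 0])  # one false positive
```

Weighting false negatives more heavily is one way to push recall up on a dataset where positives are the minority, which may explain the higher recall in the tversky row.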

tversky, full experiment (all folds)

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_70 fold_1 | 0.875 | 0.829166 | 0.846153 | 0.785714 | 0.916666 | 0.85 |

| Experiment_70 fold_2 | 0.75 | 0.675 | 0.666666 | 0.666666 | 0.666666 | 0.8 |

| Experiment_70 fold_3 | 0.71875 | 0.691666 | 0.526315 | 0.714285 | 0.416666 | 0.899999 |

| Experiment_70 fold_4 | 0.71875 | 0.718614 | 0.608695 | 0.583333 | 0.636363 | 0.761904 |

| Experiment_70 fold_5 | 0.5625 | 0.597402 | 0.533333 | 0.421052 | 0.727272 | 0.476190 |

Switched back to 16 frames

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_74 fold_1 | 0.84375 | 0.770833 | 0.782608 | 0.818181 | 0.75 | 0.899999 |

| Experiment_74 fold_2 | 0.6875 | 0.645833 | 0.375 | 0.75 | 0.25 | 0.949999 |

| Experiment_74 fold_3 | 0.6875 | 0.6875 | 0.5454 | 0.6 | 0.5 | 0.8 |

| Experiment_74 fold_4 | 0.78125 | 0.744588 | 0.666666 | 0.699999 | 0.636363 | 0.857142 |

| Experiment_74 fold_5 | 0.6875 | 0.670995 | 0.583333 | 0.538461 | 0.636363 | 0.714285 |

bce loss

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_75 fold_1 | 0.8125 | 0.7666 | 0.75 | 0.75 | 0.75 | 0.85 |

| Experiment_75 fold_2 | 0.6875 | 0.637499 | 0.444444 | 0.666666 | 0.333333 | 0.899999 |

| Experiment_75 fold_3 | 0.6875 | 0.612499 | 0.583333 | 0.583333 | 0.583333 | 0.75 |

| Experiment_75 fold_4 | 0.75 | 0.766233 | 0.555555 | 0.714285 | 0.454545 | 0.904761 |

| Experiment_75 fold_5 | 0.59375 | 0.683982 | 0.580645 | 0.449999 | 0.818181 | 0.476190 |

With the modules removed

bce

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_76 fold_1 | 0.8125 | 0.8292 | 0.7273 | 0.8 | 0.6667 | 0.8999 |

| Experiment_76 fold_2 | 0.75 | 0.7083 | 0.6923 | 0.6429 | 0.75 | 0.75 |

| Experiment_76 fold_3 | 0.75 | 0.7916 | 0.6364 | 0.6999 | 0.5833 | 0.85 |

| Experiment_76 fold_4 | 0.78125 | 0.7359 | 0.6666 | 0.6999 | 0.6363 | 0.8571 |

| Experiment_76 fold_5 | 0.4375 | 0.5757 | 0.5263 | 0.3703 | 0.9090 | 0.1904 |

With only gated added

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_77 fold_1 | 0.6875 | 0.7375 | 0.6666 | 0.5555 | 0.8333 | 0.6 |

| Experiment_77 fold_2 | 0.7187 | 0.6666 | 0.64 | 0.6153 | 0.6666 | 0.75 |

| Experiment_77 fold_3 | 0.5937 | 0.5916 | 0.6285 | 0.4782 | 0.9166 | 0.4 |

| Experiment_77 fold_4 | 0.5625 | 0.6147 | 0.5882 | 0.4347 | 0.9090 | 0.3809 |

| Experiment_77 fold_5 |

With only attn added

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_78 fold_1 | 0.75 | 0.7541 | 0.6666 | 0.6666 | 0.6666 | 0.8 |

| Experiment_78 fold_2 | 0.71875 | 0.6916 | 0.64 | 0.6153 | 0.6666 | 0.75 |

| Experiment_78 fold_3 | 0.625 | 0.6208 | 0.6 | 0.5 | 0.75 | 0.55 |

| Experiment_78 fold_4 | 0.7187 | 0.5930 | 0.5263 | 0.625 | 0.4545 | 0.8571 |

| Experiment_78 fold_5 |

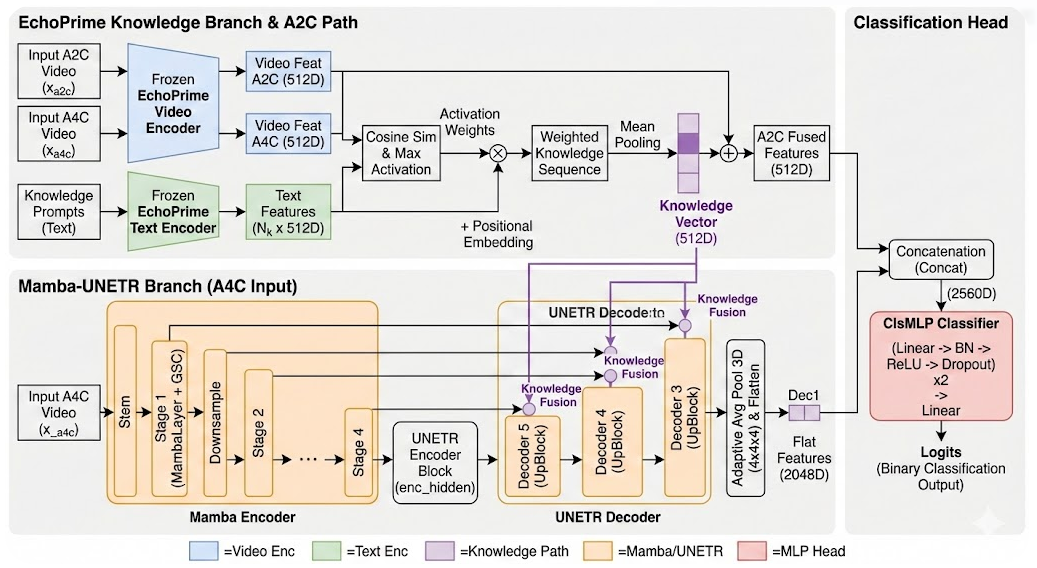

3.1.1 mamba+echoprime video

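Two branches extract features from the two views, one using echo_prime and one using mamba; the features are then fed together into the classifier.

mamba: A2C view data

echo_prime: A4C view data

Fusion: channel concatenation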
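The fusion step can be illustrated with a minimal plain-Python sketch: the two branch feature vectors are concatenated along the channel axis and passed to a linear head with a sigmoid. The function name, the feature sizes, and the single linear layer are assumptions for illustration; the actual classifier is not described in the notes.

```python
import math


def concat_and_classify(feat_mamba, feat_echo, weights, bias=0.0):
    """Concatenate two branch features and apply a linear + sigmoid head."""
    fused = list(feat_mamba) + list(feat_echo)      # channel concatenation
    if len(weights) != len(fused):
        raise ValueError("weights must match the concatenated feature size")
    logit = sum(w * x for w, x in zip(weights, fused)) + bias
    prob = 1.0 / (1.0 + math.exp(-logit))           # predicted probability
    return fused, prob


# Toy features: 2 channels from the mamba branch, 1 from the echo_prime branch.
fused, prob = concat_and_classify([1.0, 2.0], [3.0], weights=[0.0, 0.0, 0.0])
```

Concatenation keeps both feature sets intact and leaves the weighting between views to the classifier, in contrast to gated or weighted fusion, which mix the features before classification.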

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_79 fold_1 | 0.7813 | 0.7333 | 0.6667 | 0.7777 | 0.5833 | 0.90 |

| Experiment_79 fold_2 | 0.78125 | 0.774999 | 0.5882 | 1.0 | 0.4166 | 1.0 |

| Experiment_79 fold_3 | 0.875 | 0.9208 | 0.818181 | 0.899999 | 0.75 | 0.949999 |

| Experiment_79 fold_4 | 0.84375 | 0.839826 | 0.761904 | 0.8 | 0.727272 | 0.904761 |

| Experiment_79 fold_5 | 0.78125 | 0.757575 | 0.6666 | 0.699999 | 0.636363 | 0.857142 |

| Experiment_79 | 0.8125 |

Keep the 2×2×2 spatial structure

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_80 fold_1 | 0.78125 | 0.695833 | 0.666666 | 0.777777 | 0.5833 | 0.8999 |

| Experiment_80 fold_2 | 0.78125 | 0.787499 | 0.695652 | 0.727272 | 0.666666 | 0.85 |

| Experiment_80 fold_3 | 0.875 | 0.8958 | 0.846153 | 0.785714 | 0.916666 | 0.85 |

| Experiment_80 fold_4 | 0.84375 | 0.839826 | 0.782608 | 0.75 | 0.818181 | 0.857142 |

| Experiment_80 fold_5 | 0.8125 | 0.787878 | 0.6666 | 0.8571 | 0.5454 | 0.9523 |

| Experiment_80 | 0.8188 | 0.8014 | 0.7315 | 0.7796 | 0.7060 | 0.8818 |

Keep the 4×4×4 spatial structure

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_81 fold_1 | 0.8125 | 0.875 | 0.6999 | 0.875 | 0.5833 | 0.9499 |

| Experiment_81 fold_2 | 0.8125 | 0.775 | 0.7272 | 0.8 | 0.666666 | 0.899999 |

| Experiment_81 fold_3 | 0.875 | 0.879166 | 0.8333 | 0.8333 | 0.8333 | 0.8999 |

| Experiment_81 fold_4 | 0.84375 | 0.848484 | 0.7826 | 0.75 | 0.818181 | 0.857142 |

| Experiment_81 fold_5 | 0.84375 | 0.7922 | 0.7619 | 0.8 | 0.727272 | 0.904761 |

| Experiment_81 | 0.8375 | 0.8139 | 0.7610 | 0.8117 | 0.7257 | 0.9023 |

Swap the feature extractors

mamba: A4C view data

echo_prime: A2C view data

Fusion: channel concatenation

Keep the 4×4×4 spatial structure

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_82 fold_1 | 0.90625 | 0.879166 | 0.879999 | 0.846153 | 0.916666 | 0.899999 |

| Experiment_82 fold_2 | 0.78125 | 0.741666 | 0.695652 | 0.727272 | 0.666666 | 0.85 |

| Experiment_82 fold_3 | 0.8125 | 0.8666 | 0.7692 | 0.714285 | 0.8333 | 0.8 |

| Experiment_82 fold_4 | 0.90625 | 0.9220 | 0.842105 | 1.0 | 0.727272 | 1.0 |

| Experiment_82 fold_5 | 0.75 | 0.6666 | 0.5 | 0.8 | 0.363636 | 0.9523 |

| Experiment_82 | 0.8312 | 0.8152 | 0.7373 | 0.8175 | 0.6976 | 0.8805 |

Learning rate

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_83 fold_5 1e-4 | 0.71875 | 0.6320 | 0.3077 | 1.0 | 0.1818 | 1.0 |

| Experiment_84 fold_5 1e-6 | 0.78125 | 0.7445 | 0.5882 | 0.8333 | 0.454545 | 0.952380 |

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_84 fold_1 | 0.9375 | 0.904166 | 0.916666 | 0.916666 | 0.916666 | 0.949999 |

| Experiment_84 fold_2 | 0.78125 | 0.699999 | 0.72 | 0.692307 | 0.75 | 0.8 |

| Experiment_84 fold_3 | 0.84375 | 0.816666 | 0.8 | 0.769230 | 0.833333 | 0.85 |

| Experiment_84 fold_4 | 0.90625 | 0.8961 | 0.8571 | 0.8999 | 0.818181 | 0.952380 |

| Experiment_84 fold_5 | 0.78125 | 0.7445 | 0.5882 | 0.8333 | 0.454545 | 0.952380 |

| Experiment_84 95% | 0.8500 | 0.8249 | 0.7857 | 0.8148 | 0.7586 | 0.9020 |
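The summary row is not the plain mean of the five fold rows in every column (the mean f1 of the folds is about 0.776, not 0.7857). It is consistent with pooling the confusion counts of all folds and computing each metric once. The per-fold counts below are inferred from the reported precision, recall, and specificity under the assumption of 32 validation samples per fold; they are not stated in the notes, and the auc column cannot be reproduced from counts.

```python
# (tp, fp, fn, tn) per fold for Experiment_84, inferred from the table above.
FOLDS = [
    (11, 1, 1, 19),
    (9, 4, 3, 16),
    (10, 3, 2, 17),
    (9, 1, 2, 20),
    (5, 1, 6, 20),
]


def pooled_metrics(folds):
    """Sum confusion counts over folds, then compute each metric once."""
    tp = sum(f[0] for f in folds)
    fp = sum(f[1] for f in folds)
    fn = sum(f[2] for f in folds)
    tn = sum(f[3] for f in folds)
    return {
        "acc": (tp + tn) / (tp + fp + fn + tn),
        "f1": 2 * tp / (2 * tp + fp + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


summary = {name: round(value, 4) for name, value in pooled_metrics(FOLDS).items()}
```

Pooling weights every sample equally, so a weak fold such as fold_5 pulls recall down less abruptly than it would in a per-fold mean of ratios.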

3.1.2 mamba+echoprime text video

Three layers of knowledge vectors are added to the Mamba decoder.

mamba: A4C view data

echo_prime: A2C view data

Fusion: channel concatenation

Keep the 4×4×4 spatial structure; loss: bce

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_102 fold_1 | 0.9375 | 0.895833 | 0.916666 | 0.916666 | 0.916666 | 0.949999 |

| Experiment_102 fold_2 | 0.78125 | 0.7374 | 0.6666 | 0.7777 | 0.5833 | 0.8999 |

| Experiment_102 fold_3 | 0.78125 | |||||

| Experiment_102 fold_4 | 0.875 | |||||

| Experiment_102 fold_5 | 0.75 | |||||

| Experiment_102 | 0.816 |

Knowledge vectors are added both to the Mamba decoder and to the EchoPrime video feature extractor.

Learning rate 1e-5

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_103 fold_1 | 0.90625 | 0.8791 | 0.8799 | 0.8461 | 0.9166 | 0.8999 |

| Experiment_103 fold_2 | 0.78125 | |||||

| Experiment_103 fold_3 | 0.8125 | |||||

| Experiment_103 fold_4 | 0.875 | 0.8744 | 0.8 | 0.8888 | 0.7272 | 0.9523 |

| Experiment_103 fold_5 | 0.75 | |||||

| Experiment_103 | 0.825 |

Loss: asymmetric; learning rate 1e-5

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_104 fold_1 | 0.90625 | |||||

| Experiment_104 fold_2 | 0.78125 | |||||

| Experiment_104 fold_5 | 0.75 |

Learning rate

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_105 fold_5 1e-6 | 0.71875 | |||||

| Experiment_106 fold_5 1e-5 | 0.75 | |||||

| Experiment_107 fold_5 1e-4 | 0.71875 | |||||

| Experiment_108 fold_5 3e-4 | 0.75 | |||||

| Experiment_109 fold_5 3e-5 | 0.6875 | |||||

| Experiment_110 fold_5 3e-6 | 0.71875 |

The results are quite poor, so only one layer of knowledge vectors is added instead.

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_111 fold_5 1e-6 | 0.75 |

Knowledge vectors are added at the bottleneck layer of the A4C Mamba and also to the A2C video encoder.

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_112 fold_1 | 0.9375 | 0.925 | 0.916666 | 0.916666 | 0.916666 | 0.9499 |

| Experiment_112 fold_2 | 0.78125 | 0.7374 | 0.6666 | 0.7777 | 0.5833 | 0.8999 |

| Experiment_112 fold_3 | 0.78125 | 0.7958 | 0.72 | 0.6923 | 0.75 | 0.8 |

| Experiment_112 fold_4 | 0.875 | 0.8701 | 0.8181 | 0.818181 | 0.818181 | 0.9047 |

| Experiment_112 fold_5 | ||||||

| Experiment_112 |

b16

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_113 fold_1 | 0.90625 | 0.9208 | 0.8799 | 0.8461 | 0.9166 | 0.8999 |

| Experiment_113 fold_2 | 0.78125 | 0.725 | 0.72 | 0.6923 | 0.75 | 0.8 |

| Experiment_113 fold_3 | 0.75 | 0.8125 | 0.7142 | 0.625 | 0.8333 | 0.6999 |

| Experiment_113 fold_4 | 0.90625 | 0.8658 | 0.8571 | 0.8999 | 0.8181 | 0.9523 |

| Experiment_113 fold_5 | 0.78125 | 0.6666 | 0.6666 | 0.6999 | 0.6363 | 0.8571 |

| Experiment_113 |

Swapping A2C and A4C: the results are much worse.

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_114 fold_1 | 0.75 | 0.8458 | 0.7333 | 0.6111 | 0.9166 | 0.6499 |

| Experiment_114 fold_2 | 0.8125 | 0.875 | 0.7692 | 0.7142 | 0.8333 | 0.8 |

| Experiment_114 fold_3 | 0.78125 | 0.825 | 0.7586 | 0.6470 | 0.9166 | 0.6999 |

| Experiment_114 fold_4 | 0.6875 | 0.7748 | 0.6428 | 0.5294 | 0.8181 | 0.6190 |

| Experiment_114 fold_5 | 0.75 | 0.6926 | 0.6666 | 0.6153 | 0.7272 | 0.7619 |

Knowledge vectors are added to the Mamba decoder and to the EchoPrime video encoder.

mamba: A4C view data

echo_prime: A2C view data

Fusion: channel concatenation

Keep the 4×4×4 spatial structure; loss: bce

Learning rate 1e-6, b8

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_115 fold_1 | 0.9375 | 0.9125 | 0.9166 | 0.9166 | 0.9166 | 0.9499 |

| Experiment_115 fold_2 | 0.8125 | 0.7333 | 0.75 | 0.75 | 0.75 | 0.85 |

| Experiment_115 fold_3 | 0.8125 | 0.8041 | 0.7272 | 0.8 | 0.6666 | 0.8999 |

| Experiment_115 fold_4 | 0.90625 | 0.9004 | 0.8571 | 0.8999 | 0.8181 | 0.9523 |

| Experiment_115 fold_5 | 0.78125 | 0.7835 | 0.5882 | 0.8333 | 0.4545 | 0.9523 |

| Experiment_115 95% | 0.8500 | 0.8315 | 0.7778 | 0.8400 | 0.7241 | 0.9216 |

3.1.3 orginmutimodel

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_132 fold_1 | 0.78125 | 0.7041 | 0.6315 | 0.8571 | 0.5 | 0.9499 |

| Experiment_132 fold_2 | 0.78125 | 0.7083 | 0.6666 | 0.7777 | 0.5833 | 0.8999 |

| Experiment_132 fold_3 | 0.71875 | 0.6666 | 0.64 | 0.6153 | 0.6666 | 0.75 |

| Experiment_132 fold_4 | 0.8125 | 0.8095 | 0.75 | 0.6923 | 0.8181 | 0.8095 |

| Experiment_132 fold_5 | 0.6562 | 0.4155 | 0.0 | 0.0 | 0.0 | 1.0 |

| Experiment_132 |

3.2 HMC dataset

A simple first attempt; the results were very poor.

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_73 fold_1 | 0.84375 | 0.884057 | 0.888888 | 0.909090 | 0.869565 | 0.777777 |

| Experiment_73 fold_2 | 0.75 | 0.744588 | 0.826086 | 0.759999 | 0.904761 | 0.454545 |

| Experiment_73 fold_3 | 0.6875 | 0.647058 | 0.75 | 0.652174 | 0.882352 | 0.466666 |

| Experiment_73 fold_4 | 0.6875 | 0.536796 | 0.807692 | 0.677419 | 1.0 | 0.090909 |

| Experiment_73 fold_5 |

3.2.1 mamba+echoprime video

mamba: A4C view data

echo_prime: A2C view data

Fusion: channel concatenation

Keep the 4×4×4 spatial structure

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_85 fold_1 | 0.90625 | 0.913043 | 0.936170 | 0.916666 | 0.956521 | 0.777777 |

| Experiment_85 fold_2 | 0.78125 | 0.787878 | 0.829268 | 0.85 | 0.8095 | 0.7272 |

| Experiment_85 fold_3 | 0.8125 | 0.8784 | 0.8235 | 0.8235 | 0.8235 | 0.8 |

| Experiment_85 fold_4 | 0.8125 | 0.8095 | 0.85 | 0.8947 | 0.8095 | 0.8182 |

| Experiment_85 fold_5 | 0.84375 | 0.878787 | 0.87179 | 0.9444 | 0.8095 | 0.9090 |

| Experiment_85 | 0.83125 |

Loss changed to tversky

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_86 fold_2 | 0.78125 | 0.813852 | 0.820512 | 0.888888 | 0.761904 | 0.818181 |

Loss changed to focal

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_87 fold_2 | 0.78125 | 0.8 | 0.8108 | 0.9375 | 0.714285 | 0.9090 |

Loss changed to dice

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_88 fold_2 | 0.78125 | 0.783549 | 0.820512 | 0.888888 | 0.761904 | 0.818181 |

Loss changed to bce_dice

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_89 fold_2 | 0.78125 | 0.805194 | 0.8205 | 0.8888 | 0.7619 | 0.818181 |

Loss changed to asymmetric

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_90 fold_1 | 0.90625 | 0.932367 | 0.936170 | 0.916666 | 0.956521 | 0.777777 |

| Experiment_90 fold_2 | 0.8125 | 0.8484 | 0.8571 | 0.8571 | 0.8571 | 0.7272 |

| Experiment_90 fold_3 | 0.84375 | 0.843137 | 0.864864 | 0.8 | 0.941176 | 0.733333 |

| Experiment_90 fold_4 | 0.8125 | 0.835497 | 0.85 | 0.894736 | 0.809523 | 0.818181 |

| Experiment_90 fold_5 | 0.84375 | 0.861471 | 0.883720 | 0.863636 | 0.904761 | 0.727272 |

| Experiment_90 | 0.84375 | 0.864175 | 0.888351 | 0.866628 | 0.891956 | 0.753332 |

Changed to cross-attention fusion

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_95 fold_1 | 0.84375 | 0.903381 | 0.883720 | 0.949999 | 0.826086 | 0.888888 |

| Experiment_95 fold_2 | 0.78125 | 0.779220 | 0.851063 | 0.769230 | 0.952380 | 0.454545 |

| Experiment_95 fold_3 | 0.8125 | |||||

| Experiment_95 fold_4 | 0.78125 | |||||

| Experiment_95 fold_5 | 0.8125 | 0.818181 | 0.857142 | 0.857142 | 0.857142 | 0.727272 |

| Experiment_95 | poor |

Weighted fusion

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_96 fold_1 | 0.90625 | 0.937198 | 0.936170 | 0.916666 | 0.9565 | 0.7777 |

| Experiment_96 fold_2 | 0.8125 | 0.826839 | 0.857142 | 0.857142 | 0.857142 | 0.727272 |

| Experiment_96 fold_3 | 0.8125 | 0.882352 | 0.849999 | 0.739130 | 1.0 | 0.6 |

| Experiment_96 fold_4 | 0.8125 | 0.826839 | 0.85 | 0.894736 | 0.809523 | 0.818181 |

| Experiment_96 fold_5 | 0.84375 | 0.857142 | 0.883720 | 0.863636 | 0.904761 | 0.727272 |

| Experiment_96 |

AdamW optimizer

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_97 fold_1 | 0.90625 | 0.927536 | 0.930232 | 1.0 | 0.869565 | 1.0 |

| Experiment_97 fold_2 | 0.78125 | 0.831168 | 0.810810 | 0.9375 | 0.714285 | 0.909090 |

| Experiment_97 fold_3 | 0.78125 | 0.882352 | 0.774193 | 0.857142 | 0.705882 | 0.866666 |

| Experiment_97 fold_4 | 0.8125 | 0.844155 | 0.842105 | 0.941176 | 0.761904 | 0.909090 |

| Experiment_97 fold_5 | 0.8125 | 0.883116 | 0.85 | 0.894736 | 0.809523 | 0.818181 |

| Experiment_97 |

SGD

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_98 fold_1 | 0.8125 | 0.816425 | 0.869565 | 0.869565 | 0.869565 | 0.666666 |

| Experiment_98 fold_2 | 0.65625 |

RMSprop

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_99 fold_1 | 0.90625 | 0.913043 | 0.930232 | 1.0 | 0.869565 | 1.0 |

| Experiment_99 fold_2 | 0.78125 | 0.822510 | 0.810810 | 0.9375 | 0.714285 | 0.909090 |

Adagrad

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_100 fold_1 | 0.78125 | |||||

| Experiment_100 fold_2 | 0.65625 |

Switched back to the MADGRAD optimizer.

Added some data augmentation.

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_101 fold_1 | 0.875 | 0.9565 | 0.916666 | 0.879999 | 0.956521 | 0.666666 |

| Experiment_101 fold_2 | 0.8125 | 0.7922 | 0.85 | 0.8947 | 0.8095 | 0.818181 |

| Experiment_101 fold_3 | 0.84375 | 0.886274 | 0.871794 | 0.772727 | 1.0 | 0.666666 |

| Experiment_101 fold_4 | 0.75 | |||||

| Experiment_101 fold_5 | 0.8125 | 0.8311 | 0.875 | 0.7777 | 1.0 | 0.4545 |

mamba: A2C view data

echo_prime: A4C view data

Fusion: channel concatenation

Keep the 4×4×4 spatial structure

Loss is still asymmetric

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_92 fold_1 | 0.90625 | 0.971014 | 0.936170 | 0.916666 | 0.956521 | 0.7777 |

| Experiment_92 fold_2 | 0.84375 | 0.805194 | 0.888888 | 0.833333 | 0.952380 | 0.636363 |

| Experiment_92 fold_3 | 0.75 | 0.7960 | 0.7647 | 0.7647 | 0.7647 | 0.7333 |

| Experiment_92 fold_4 | 0.78125 | 0.826839 | 0.810810 | 0.9375 | 0.714285 | 0.909090 |

| Experiment_92 fold_5 | 0.84375 | 0.878787 | 0.878048 | 0.899999 | 0.857142 | 0.818181 |

| Experiment_92 | 0.825 | poor results |

mamba: A4C view data

echo_prime: A2C view data

Fusion: channel concatenation

Keep the 4×4×4 spatial structure

Loss is still asymmetric

but dec0 is used as the Mamba output

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_93 fold_1 | 0.90625 | 0.942028 | 0.938775 | 0.884615 | 1.0 | 0.666666 |

| Experiment_93 fold_2 | 0.8125 | 0.792207 | 0.85 | 0.894736 | 0.809523 | 0.818181 |

| Experiment_93 fold_3 | 0.8125 | 0.870588 | 0.823529 | 0.823529 | 0.823529 | 0.8 |

| Experiment_93 fold_4 | 0.8125 | 0.805194 | 0.857142 | 0.857142 | 0.857142 | 0.727272 |

| Experiment_93 fold_5 | 0.84375 | 0.874458 | 0.878048 | 0.89999 | 0.857142 | 0.818181 |

| Experiment_93 | 0.8375 |

Keep the 6×6×6 spatial structure

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_93 fold_1 | 0.90625 | |||||

| Experiment_93 fold_3 | 0.75 |

mamba: A4C view data

echo_prime: A2C view data

Fusion: channel concatenation

Keep the 4×4×4 spatial structure

Loss is still asymmetric

but dec2 is used as the Mamba output

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_94 fold_1 | 0.90625 | 0.946859 | 0.936170 | 0.916666 | 0.956521 | 0.777777 |

| Experiment_94 fold_2 | 0.78125 | 0.796536 | 0.829268 | 0.85 | 0.809523 | 0.727272 |

| Experiment_94 fold_3 | 0.84375 | 0.925490 | 0.848484 | 0.875 | 0.823529 | 0.866666 |

| Experiment_94 fold_4 | 0.75 | 0.779220 | 0.809523 | 0.809523 | 0.809523 | 0.636363 |

| Experiment_94 fold_5 | 0.8125 | 0.861471 | 0.857142 | 0.857142 | 0.857142 | 0.727272 |

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_95 fold_1 1e-8 | 0.90625 | 0.9565 | 0.938775 | 0.884615 | 1.0 | 0.666666 |

| Experiment_95 fold_3 1e-8 | 0.8125 | 0.921568 | 0.849999 | 0.739130 | 1.0 | 0.6 |

3.2.2 mamba+echoprime text video

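Knowledge vectors added

mamba: A4C view data

echo_prime: A2C view data

Knowledge vectors are added to both branches

Fusion: channel concatenation

Keep the 4×4×4 spatial structure

Learning rate 1e-6, b8, loss: bce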

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_116 fold_1 | 0.90625 | 0.8067 | 0.9387 | 0.8846 | 1.0 | 0.6666 |

| Experiment_116 fold_2 | 0.78125 (0.81) | 0.818181 | 0.8108 | 0.9375 | 0.7142 | 0.9090 |

| Experiment_116 fold_3 | 0.8125 (0.84) | 0.8509 | 0.8 | 0.9230 | 0.7058 | 0.9333 |

| Experiment_116 fold_4 | 0.8125 | 0.8614 | 0.85 | 0.8947 | 0.8095 | 0.8181 |

| Experiment_116 fold_5 | 0.84375 | 0.8484 | 0.8837 | 0.8636 | 0.9047 | 0.7272 |

| Experiment_116 | 0.83125 |

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_127 fold_1 | 0.90625 | 0.8792 | 0.9387 | 0.8846 | 1.0 | 0.6666 |

| Experiment_127 fold_2 | 0.8125 | 0.9264 | 0.8333 | 1.0 | 0.7142 | 1.0 |

| Experiment_127 fold_3 | 0.8125 | 0.8666 | 0.8 | 0.9230 | 0.7058 | 0.9333 |

| Experiment_127 fold_4 | 0.75 | 0.8138 | 0.7894 | 0.8823 | 0.7142 | 0.8181 |

| Experiment_127 fold_5 | 0.8125 | 0.8225 | 0.8571 | 0.8571 | 0.8571 | 0.7272 |

| Experiment_127 |

Learning rate 1e-6, batch size 16, loss: BCE

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_128 fold_1 | 0.875 | 0.9178 | 0.9166 | 0.8799 | 0.9565 | 0.6666 |

| Experiment_128 fold_2 | 0.75 | 0.7878 | 0.7894 | 0.8823 | 0.7142 | 0.8181 |

| Experiment_128 fold_3 | 0.84375 | 0.9098 | 0.8387 | 0.9285 | 0.7647 | 0.9333 |

| Experiment_128 fold_4 | 0.78125 | 0.8225 | 0.8108 | 0.9375 | 0.7142 | 0.9090 |

| Experiment_128 fold_5 | 0.84375 | 0.8658 | 0.8780 | 0.8999 | 0.8571 | 0.8181 |

| Experiment_128 | 0.81875 | 0.86072 | 0.84474 | 0.90562 | 0.78136 | 0.84722 |
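The six columns in these tables are tied together by each fold's confusion matrix. As a rough check, the sketch below recomputes them from counts. The counts used for Experiment_128 fold_1 (TP=22, FP=3, TN=6, FN=1) are not reported in the notes; they are inferred here from recall 0.9565 (22/23) and specificity 0.6666 (6/9), so treat them as an assumption.

```python
def binary_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, specificity and F1 from confusion counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    f1_denominator = 2 * tp + fp + fn
    f1 = 2 * tp / f1_denominator if f1_denominator else 0.0
    return {
        "acc": (tp + tn) / total,
        "f1": f1,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
    }


# Inferred (not reported) counts for Experiment_128 fold_1, 32 test samples.
fold_1 = binary_metrics(tp=22, fp=3, tn=6, fn=1)
for name, value in fold_1.items():
    print(f"{name}: {value:.4f}")
```

The printed values are rounded, whereas the table entries appear to be truncated (0.8799, 0.6666), so the last digit can differ.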

Learning rate 1e-6, batch size 12, loss: BCE

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_129 fold_1 | 0.90625 | 0.9371 | 0.9361 | 0.9166 | 0.9565 | 0.7777 |

| Experiment_129 fold_2 | 0.75 | 0.7922 | 0.7999 | 0.8421 | 0.7619 | 0.7272 |

| Experiment_129 fold_3 | 0.84375 | 0.8549 | 0.8387 | 0.9285 | 0.7647 | 0.9333 |

| Experiment_129 fold_4 | 0.75 | 0.7965 | 0.7894 | 0.8823 | 0.7142 | 0.8181 |

| Experiment_129 fold_5 | 0.84375 | 0.8614 | 0.8717 | 0.9444 | 0.8095 | 0.9090 |

| Experiment_129 |

Learning rate 1e-6, batch size 4, loss: BCE

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_130 fold_1 | 0.90625 | 0.9516 | 0.9333 | 0.9545 | 0.9130 | 0.8888 |

| Experiment_130 fold_2 | 0.78125 | 0.7835 | 0.8372 | 0.8181 | 0.8571 | 0.6363 |

| Experiment_130 fold_3 | 0.84375 | 0.8784 | 0.8387 | 0.9285 | 0.7647 | 0.9333 |

| Experiment_130 fold_4 | 0.78125 | 0.8 | 0.8205 | 0.8888 | 0.7619 | 0.8181 |

| Experiment_130 fold_5 | 0.84375 | 0.8484 | 0.8717 | 0.9444 | 0.8095 | 0.9090 |

| Experiment_130 |

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_131 fold_1 | 0.9375 | 0.9565 | 0.9545 | 1.0 | 0.9130 | 1.0 |

| Experiment_131 fold_2 | 0.8125 | 0.8701 | 0.85 | 0.8947 | 0.8095 | 0.8181 |

| Experiment_131 fold_3 | 0.875 | 0.9568 | 0.8947 | 0.8095 | 1.0 | 0.7333 |

| Experiment_131 fold_4 | 0.8125 | 0.8744 | 0.85 | 0.8947 | 0.8095 | 0.8181 |

| Experiment_131 fold_5 | 0.875 | 0.8961 | 0.8999 | 0.9473 | 0.8571 | 0.9090 |

| Experiment_131 | 0.8625 | 0.9108 | 0.8899 | 0.9093 | 0.8778 | 0.8558 |
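The last row of each table is the mean over the five folds. A small sketch of that aggregation follows, using the acc and auc columns of Experiment_131 copied from the table above. The standard deviation is an extra that the notes do not report, and the variable and function names are illustrative.

```python
from statistics import mean, stdev

# acc and auc per fold, copied from the Experiment_131 rows.
folds = {
    "fold_1": {"acc": 0.9375, "auc": 0.9565},
    "fold_2": {"acc": 0.8125, "auc": 0.8701},
    "fold_3": {"acc": 0.875, "auc": 0.9568},
    "fold_4": {"acc": 0.8125, "auc": 0.8744},
    "fold_5": {"acc": 0.875, "auc": 0.8961},
}


def summarize(fold_scores, metric):
    """Return (mean, sample standard deviation) of one metric across folds."""
    values = [scores[metric] for scores in fold_scores.values()]
    return mean(values), stdev(values)


for metric in ("acc", "auc"):
    average, spread = summarize(folds, metric)
    print(f"{metric}: mean={average:.4f} std={spread:.4f}")
```

With only 32 test samples per fold, the spread across folds is worth reading next to the mean when two configurations differ by one or two points.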

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_131 fold_5 v1 | 0.84375 | 0.8831 | 0.8717 | 0.9444 | 0.8095 | 0.9090 |

| Experiment_131 fold_5 v2 | 0.84375 | 0.9047 | 0.8780 | 0.8999 | 0.8571 | 0.8181 |

Using dec2 as the output

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_126 fold_1 | 0.90625 | 0.8937 | 0.9387 | 0.8846 | 1.0 | 0.6666 |

| Experiment_126 fold_2 | 0.8125 | 0.8571 | 0.8571 | 0.8571 | 0.8571 | 0.7272 |

| Experiment_126 fold_3 | 0.75 | 0.8117 | 0.75 | 0.8 | 0.7058 | 0.8 |

| Experiment_126 fold_4 | 0.6875 | 0.7662 | 0.75 | 0.7894 | 0.7142 | 0.6363 |

| Experiment_126 fold_5 | 0.84375 | 0.8354 | 0.8718 | 0.9444 | 0.8095 | 0.9090 |

| Experiment_126 |

Learning rate 1e-5, batch size 8, loss: BCE

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_125 fold_1 | 0.875 | 0.8743 | 0.9166 | 0.8799 | 0.9565 | 0.6666 |

| Experiment_125 fold_2 | 0.75 | 0.8051 | 0.7777 | 0.9333 | 0.6666 | 0.9090 |

| Experiment_125 fold_3 | 0.78125 | 0.8705 | 0.7407 | 1.0 | 0.5882 | 1.0 |

| Experiment_125 fold_4 | 0.71875 | 0.7445 | 0.7567 | 0.875 | 0.6666 | 0.8181 |

| Experiment_125 fold_5 | 0.84375 | 0.8311 | 0.8837 | 0.8636 | 0.9047 | 0.7272 |

| Experiment_125 |

Loss: asymmetric

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_117 fold_1 | 0.90625 | 0.8405 | 0.9387 | 0.8846 | 1.0 | 0.6666 |

| Experiment_117 fold_2 | 0.75 | 0.7229 | 0.7777 | 0.9333 | 0.6666 | 0.9090 |

| Experiment_117 fold_3 | 0.8125 (0.84) | 0.8823 | 0.8421 | 0.7619 | 0.9411 | 0.6666 |

| Experiment_117 fold_4 | 0.8125 | 0.8528 | 0.8421 | 0.9411 | 0.7619 | 0.9090 |

| Experiment_117 fold_5 | 0.84375 | 0.8354 | 0.8837 | 0.8636 | 0.9047 | 0.7272 |

| Experiment_117 | 0.825 |
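The notes only name this loss "asymmetric". One common reading is an ASL-style asymmetric focal loss, in which negatives get a stronger focusing exponent and a probability margin. The sketch below assumes that form; `gamma_pos`, `gamma_neg`, `margin` and their default values are illustrative choices, not settings taken from these experiments.

```python
import math


def asymmetric_loss(p, y, gamma_pos=0.0, gamma_neg=4.0, margin=0.05, eps=1e-8):
    """Per-sample asymmetric focal loss for a predicted probability p and label y.

    Positives: -(1 - p)^gamma_pos * log(p)
    Negatives: -(p_m)^gamma_neg * log(1 - p_m), with p_m = max(p - margin, 0)
    """
    if y == 1:
        return -((1.0 - p) ** gamma_pos) * math.log(max(p, eps))
    shifted = max(p - margin, 0.0)  # easy negatives below the margin contribute nothing
    return -(shifted ** gamma_neg) * math.log(max(1.0 - shifted, eps))


def bce(p, y, eps=1e-8):
    """Plain binary cross-entropy, for comparison."""
    return -math.log(max(p if y == 1 else 1.0 - p, eps))


for prob in (0.03, 0.2, 0.8):
    print(prob, asymmetric_loss(prob, 0), bce(prob, 0))
```

Setting both exponents and the margin to zero recovers plain BCE, which makes the asymmetric and bce runs in these tables directly comparable under this assumed form.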

Loss: weighted_bce 2.0

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_118 fold_1 | 0.875 | 0.8937 | 0.9166 | 0.8799 | 0.9565 | 0.6666 |

| Experiment_118 fold_2 | 0.78125 | 0.818181 | 0.8205 | 0.8888 | 0.7619 | 0.8181 |

| Experiment_118 fold_3 | 0.8125 | 0.8431 | 0.8 | 0.9230 | 0.7058 | 0.9333 |

| Experiment_118 fold_4 | 0.75 | 0.8354 | 0.7894 | 0.8823 | 0.7142 | 0.8181 |

| Experiment_118 fold_5 | 0.84375 | 0.8441 | 0.8837 | 0.8636 | 0.9047 | 0.7272 |

| Experiment_118 | 0.8125 | 0.8469 | 0.8420 | 0.8875 | 0.7886 | 0.7931 |
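The number after weighted_bce (2.0, 0.75, 0.5) is presumably a class weight. A minimal sketch, assuming it multiplies the positive-class term in the same way as `pos_weight` in PyTorch's `BCEWithLogitsLoss`; the function name and the clamping constant are hypothetical.

```python
import math


def weighted_bce(p, y, pos_weight=2.0, eps=1e-8):
    """Binary cross-entropy with the positive term scaled by pos_weight."""
    p = min(max(p, eps), 1.0 - eps)  # keep log() finite at p = 0 or p = 1
    return -(pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p))


# The same prediction costs more when a positive is missed than when a negative is.
for weight in (2.0, 0.75, 0.5):
    missed_positive = weighted_bce(0.3, 1, pos_weight=weight)
    false_alarm = weighted_bce(0.7, 0, pos_weight=weight)
    print(weight, round(missed_positive, 4), round(false_alarm, 4))
```

Under this reading, a weight above 1 pushes the model toward higher recall and a weight below 1 toward higher specificity.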

Loss: weighted_bce 0.75

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_119 fold_1 | 0.90625 | 0.8550 | 0.9387 | 0.8846 | 1.0 | 0.6666 |

| Experiment_119 fold_2 | 0.78125 | 0.8138 | 0.8108 | 0.9375 | 0.7142 | 0.9090 |

| Experiment_119 fold_3 | 0.84375 | 0.9098 | 0.8571 | 0.8333 | 0.8823 | 0.8 |

| Experiment_119 fold_4 | 0.8125 | 0.8528 | 0.8571 | 0.8571 | 0.8571 | 0.7272 |

| Experiment_119 fold_5 | 0.8125 | 0.8528 | 0.8571 | 0.8571 | 0.8571 | 0.7272 |

| Experiment_119 | 0.83125 |

Loss: weighted_bce 0.5

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_120 fold_1 | 0.84375 | 0.8550 | 0.8936 | 0.875 | 0.9130 | 0.6666 |

| Experiment_120 fold_2 | 0.75 | 0.8268 | 0.7777 | 0.9333 | 0.6666 | 0.9090 |

| Experiment_120 fold_3 | 0.875 | 0.9058 | 0.8888 | 0.8421 | 0.9411 | 0.8 |

| Experiment_120 fold_4 | 0.75 | 0.8398 | 0.7894 | 0.8823 | 0.7142 | 0.8181 |

| Experiment_120 fold_5 | 0.78125 | 0.8398 | 0.8292 | 0.85 | 0.8095 | 0.7272 |

| Experiment_120 | 0.8 |

dec0 is used as the mamba output

Learning rate 1e-6, batch size 8, loss: BCE

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_121 fold_1 | 0.875 | 0.9227 | 0.9166 | 0.8799 | 0.9565 | 0.6666 |

| Experiment_121 fold_2 | 0.8125 | 0.8051 | 0.875 | 0.7777 | 1.0 | 0.4545 |

| Experiment_121 fold_3 | 0.875 | 0.8862 | 0.8823 | 0.8823 | 0.8823 | 0.8666 |

| Experiment_121 fold_4 | 0.6875 | 0.6839 | 0.7222 | 0.8666 | 0.6190 | 0.8181 |

| Experiment_121 fold_5 | 0.875 | 0.8917 | 0.9047 | 0.9047 | 0.9047 | 0.8181 |

| Experiment_121 | 0.825 |

dec0 is used as the mamba output

Learning rate 1e-6, batch size 8, loss: asymmetric

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_122 fold_4 | 0.6875 |

Learning rate: 1e-4

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_123 fold_1 | 0.875 | 0.8550 | 0.9166 | 0.8799 | 0.9565 | 0.6666 |

| Experiment_123 fold_2 | 0.75 | |||||

| Experiment_123 fold_3 | 0.84375 | |||||

| Experiment_123 fold_4 | 0.71875 | 0.7532 | 0.7428 | 0.9285 | 0.6190 | 0.9090 |

| Experiment_123 fold_5 | 0.8125 | |||||

| Experiment_123 |

Learning rate 1e-4, batch size 8, loss: BCE, knowledge vectors added to fewer layers

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_124 fold_1 | 0.90625 | 0.8599 | 0.9387 | 0.8846 | 1.0 | 0.6666 |

| Experiment_124 fold_2 | 0.78125 | 0.8 | 0.8205 | 0.8888 | 0.7619 | 0.8181 |

| Experiment_124 fold_3 | 0.84375 | 0.9568 | 0.8387 | 0.9285 | 0.7647 | 0.9333 |

| Experiment_124 fold_4 | 0.71875 | 0.7532 | 0.7428 | 0.9285 | 0.6190 | 0.9090 |

| Experiment_124 fold_5 | 0.8125 | 0.8311 | 0.8571 | 0.8571 | 0.8571 | 0.7272 |

| Experiment_124 | 0.8125 |

3.3 Provincial hospital data

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_test fold_5 |

A2C A4C

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_test fold_1 | 0.75 | 0.7219 | 0.8108 | 0.75 | 0.8823 | 0.5454 |

| Experiment_provincial_test fold_2 | 0.75 | 0.8556 | 0.8205 | 0.7272 | 0.9411 | 0.4545 |

| Experiment_provincial_test fold_3 | 0.8214 | 0.8214 | 0.8484 | 0.7368 | 1.0 | 0.6428 |

| Experiment_provincial_test fold_4 | 0.6666 | 0.4671 | 0.7804 | 0.7272 | 0.8421 | 0.25 |

| Experiment_provincial_test fold_5 | 0.7407 | 0.6882 | 0.7878 | 0.8125 | 0.7647 | 0.6999 |

| Experiment_provincial_test | 0.74 |

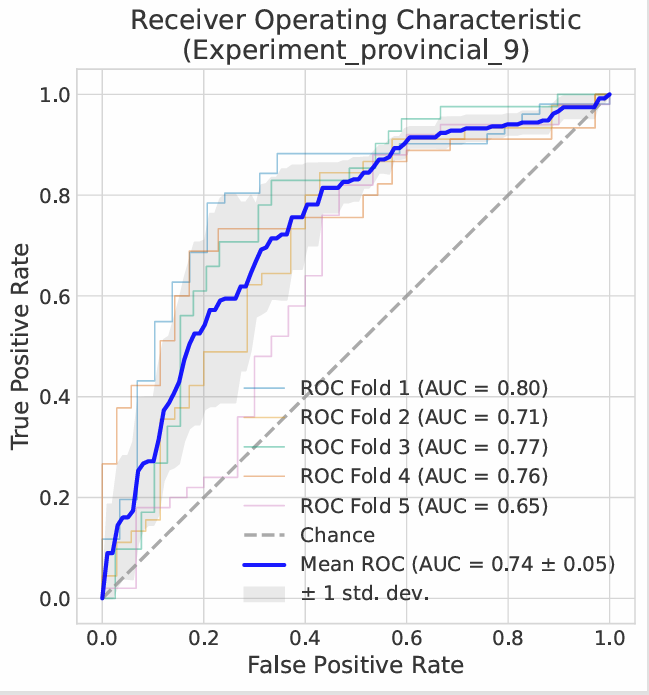

6-view data, mamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_1 fold_1 | 0.8571 | 0.8877 | 0.8823 | 0.8823 | 0.8823 | 0.8181 |

| Experiment_provincial_1 fold_2 | 0.8571 | 0.9197 | 0.875 | 0.9333 | 0.8235 | 0.9090 |

| Experiment_provincial_1 fold_3 | 0.8214 | 0.8775 | 0.8275 | 0.8 | 0.8571 | 0.7857 |

| Experiment_provincial_1 fold_4 | 0.7692 | 0.5486 | 0.8571 | 0.75 | 1.0 | 0.25 |

| Experiment_provincial_1 fold_5 | 0.7307 | 0.6405 | 0.7741 | 0.8571 | 0.7058 | 0.7777 |

| Experiment_provincial_1 | 0.8088 | 0.7881 | 0.8452 | 0.8353 | 0.8554 | 0.7358 |

6-view data, mamba, three-class classification

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_3 fold_1 | 0.75 | 0.6893 | 0.75 | 0.75 | 0.75 | |

| Experiment_provincial_3 fold_2 | 0.7142 | 0.8654 | 0.7142 | 0.7142 | 0.7142 | |

| Experiment_provincial_3 fold_3 | 0.75 | 0.8795 | 0.75 | 0.75 | 0.75 | |

| Experiment_provincial_3 fold_4 | 0.8076 | 0.6731 | 0.8076 | 0.8076 | 0.8076 | |

| Experiment_provincial_3 fold_5 | 0.7307 | 0.6515 | 0.7307 | 0.7307 | 0.7307 | |

| Experiment_provincial_3 | 0.7505 | 0.7518 | 0.7505 | 0.7505 | 0.7505 |
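In the three-class table above, acc, f1, precision and recall are identical in every row. That pattern is what micro-averaging produces for single-label multi-class predictions, so it is plausible (an assumption, not something the notes state) that these metrics were micro-averaged. The toy labels below are made up purely to illustrate the identity.

```python
def micro_scores(y_true, y_pred, num_classes):
    """Micro-averaged precision, recall and F1, plus plain accuracy."""
    tp = fp = fn = 0
    for cls in range(num_classes):
        for truth, pred in zip(y_true, y_pred):
            if pred == cls and truth == cls:
                tp += 1
            elif pred == cls and truth != cls:
                fp += 1  # predicted cls, but it was another class
            elif pred != cls and truth == cls:
                fn += 1  # was cls, but another class was predicted
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return {
        "acc": accuracy,
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "f1": 2 * tp / (2 * tp + fp + fn),
    }


# Made-up three-class labels, not taken from the experiments.
toy_true = [0, 0, 1, 1, 2, 2, 2, 1]
toy_pred = [0, 1, 1, 1, 2, 0, 2, 2]
toy_scores = micro_scores(toy_true, toy_pred, num_classes=3)
print(toy_scores)
```

Every wrong prediction counts once as a false positive (for the predicted class) and once as a false negative (for the true class), which is why the four numbers coincide. Macro-averaged or per-class scores would be more informative for comparing the three classes.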

430 cases

| name | acc | auc | f1 | precision | recall |

|---|---|---|---|---|---|

| Experiment_provincial_15 fold_0 | 0.5909 | 0.6442 | 0.5909 | 0.5909 | 0.5909 |

| Experiment_provincial_15 fold_1 | 0.6351 | 0.5633 | 0.6351 | 0.6351 | 0.6351 |

| Experiment_provincial_15 fold_2 | 0.6279 | 0.4458 | 0.6279 | 0.6279 | 0.6279 |

| Experiment_provincial_15 fold_3 | 0.6304 | 0.4827 | 0.6304 | 0.6304 | 0.6304 |

| Experiment_provincial_15 fold_4 | 0.5595 | 0.5470 | 0.5595 | 0.5595 | 0.5595 |

| Experiment_provincial_15 |

| name | acc | auc | f1 | precision | recall |

|---|---|---|---|---|---|

| Experiment_provincial_16 fold_0 | 0.6022 | 0.6275 | 0.6022 | 0.6022 | 0.6022 |

| Experiment_provincial_16 fold_1 | 0.6351 | 0.5748 | 0.6351 | 0.6351 | 0.6351 |

| Experiment_provincial_16 fold_2 | 0.6395 | 0.5086 | 0.6395 | 0.6395 | 0.6395 |

| Experiment_provincial_16 fold_3 | 0.6413 | 0.4055 | 0.6413 | 0.6413 | 0.6413 |

| Experiment_provincial_16 fold_4 | 0.6071 | 0.6992 | 0.6071 | 0.6071 | 0.6071 |

| Experiment_provincial_16 | 0.6250 | 0.5631 | 0.6250 | 0.6250 | 0.6250 |

6-view data, echoprime, 32F

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_2 fold_1 | 0.8125 | 0.9047 | 0.8571 | 0.75 | 1.0 | 0.5714 |

| Experiment_provincial_2 fold_2 | 0.8125 | 0.6727 | 0.8799 | 0.7857 | 1.0 | 0.4 |

| Experiment_provincial_2 fold_3 | 0.8125 | 0.8166 | 0.8 | 0.6666 | 1.0 | 0.6999 |

| Experiment_provincial_2 fold_4 | 0.8125 | 0.5 | 0.8888 | 0.8 | 1.0 | 0.25 |

| Experiment_provincial_2 fold_5 | 0.625 | 0.55 | 0.7692 | 0.625 | 1.0 | 0.0 |

| Experiment_provincial_2 |

With the December data added

Six views, 16F

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_4 fold_1 | 0.75 | 0.7366 | 0.8346 | 0.7361 | 0.9636 | 0.3448 |

| Experiment_provincial_4 fold_2 | 0.7093 | 0.7020 | 0.7788 | 0.7097 | 0.8627 | 0.4857 |

| Experiment_provincial_4 fold_3 | 0.7262 | 0.7312 | 0.7473 | 0.7234 | 0.7727 | 0.6750 |

| Experiment_provincial_4 fold_4 | 0.6860 | 0.6577 | 0.7652 | 0.6769 | 0.8799 | 0.4166 |

| Experiment_provincial_4 fold_5 | 0.7143 | 0.6695 | 0.7931 | 0.7302 | 0.8679 | 0.4516 |

| Experiment_provincial_4 |

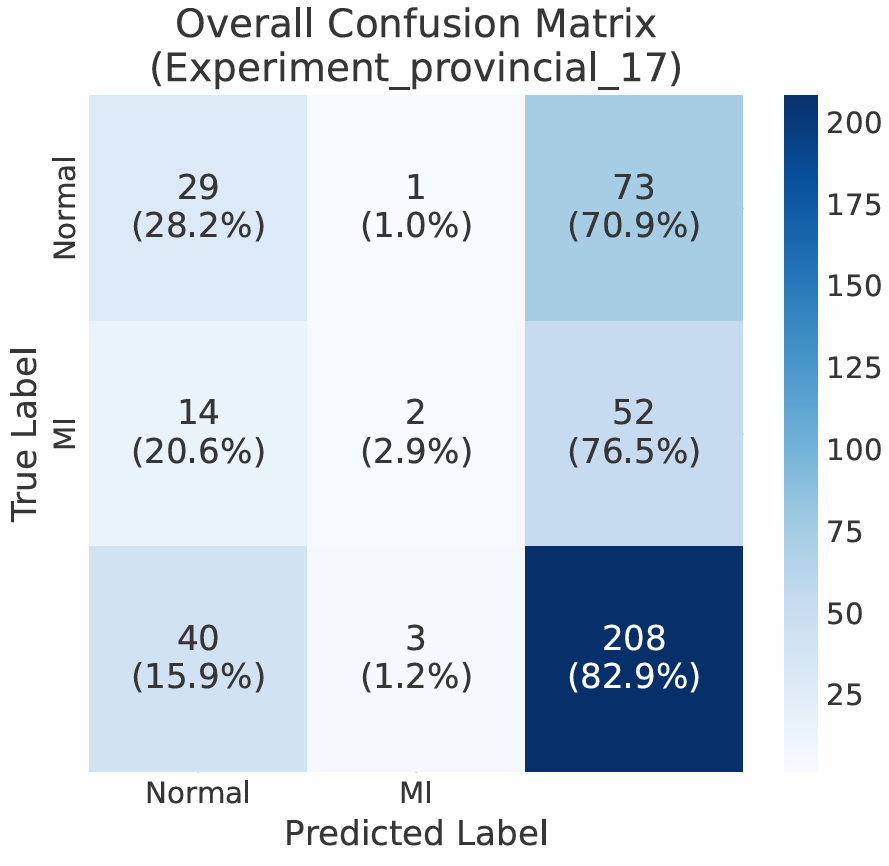

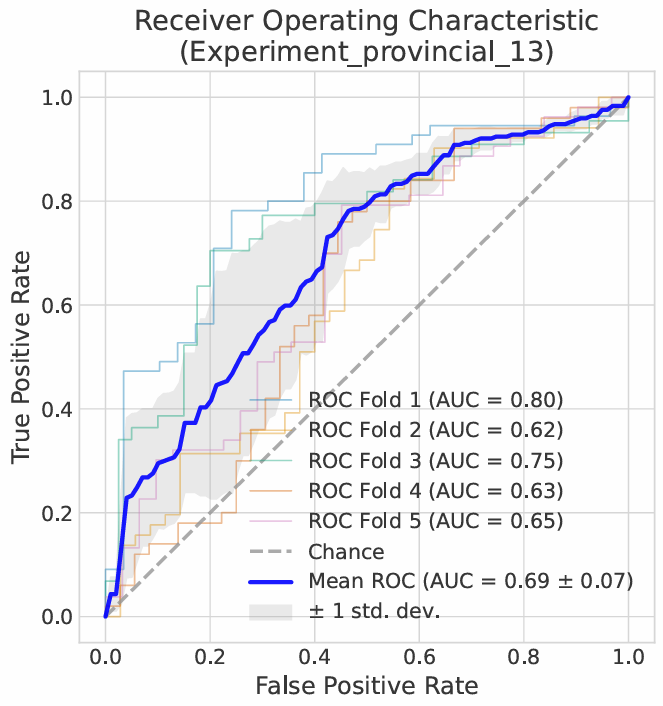

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_13 fold_1 | 0.7857 | 0.8012 | 0.8448 | 0.8032 | 0.8909 | 0.5862 |

| Experiment_provincial_13 fold_2 | 0.6744 | 0.6224 | 0.7666 | 0.6666 | 0.9020 | 0.3428 |

| Experiment_provincial_13 fold_3 | 0.75 | 0.7540 | 0.7470 | 0.7949 | 0.7045 | 0.8 |

| Experiment_provincial_13 fold_4 | 0.6860 | 0.6277 | 0.7768 | 0.6620 | 0.9399 | 0.3333 |

| Experiment_provincial_13 fold_5 | 0.6904 | 0.6524 | 0.7592 | 0.7454 | 0.7736 | 0.5484 |

| Experiment_provincial_13 | 0.7173 |

New fold split

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_14 fold_0 | 0.6931 | 0.6963 | 0.7216 | 0.7609 | 0.6862 | 0.7027 |

| Experiment_provincial_14 fold_1 | 0.7567 | 0.7720 | 0.7954 | 0.7954 | 0.7954 | 0.6999 |

| Experiment_provincial_14 fold_2 | 0.7209 | 0.7020 | 0.7857 | 0.7586 | 0.8148 | 0.5625 |

| Experiment_provincial_14 fold_3 | 0.6739 | 0.7306 | 0.6875 | 0.8461 | 0.5789 | 0.8286 |

| Experiment_provincial_14 fold_4 | 0.5952 | 0.5399 | 0.6909 | 0.6129 | 0.7917 | 0.3333 |

| Experiment_provincial_14 | 0.6880 |

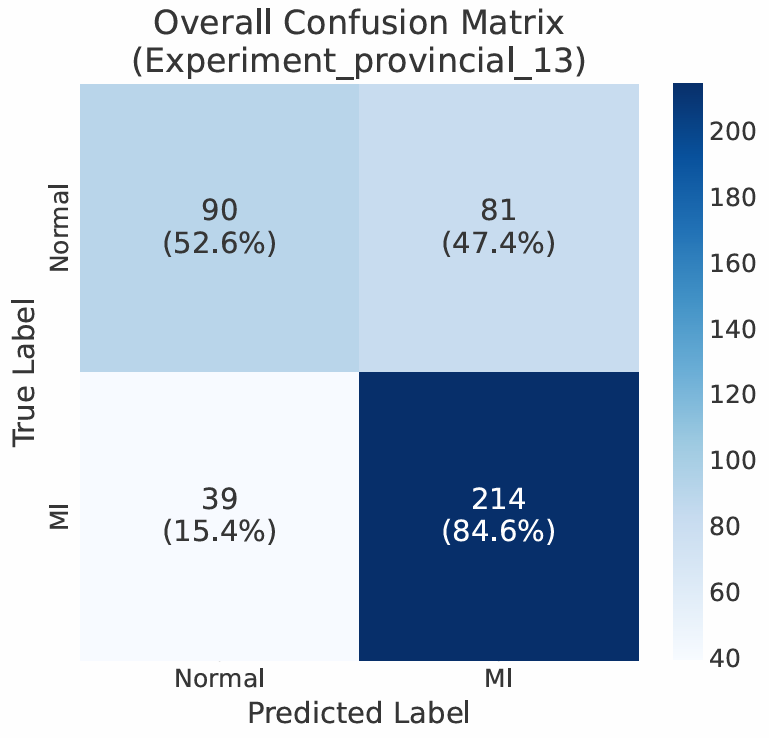

A2C + A4C

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

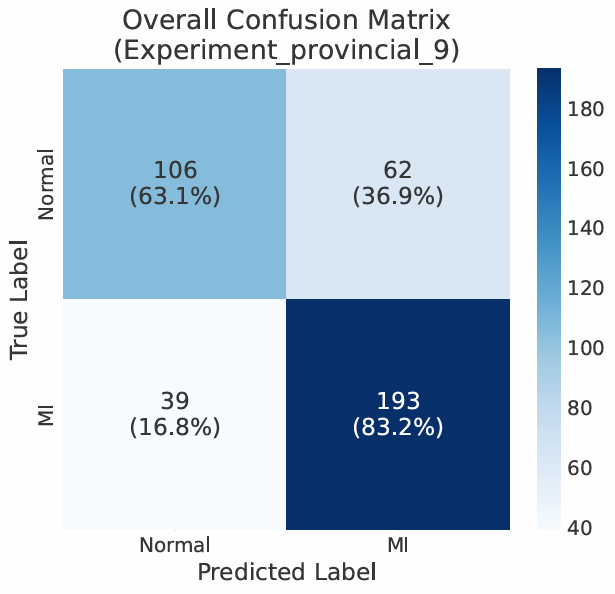

| Experiment_provincial_9 fold_1 | 0.8 | 0.8026 | 0.8491 | 0.8181 | 0.8824 | 0.6552 |

| Experiment_provincial_9 fold_2 | 0.7250 | 0.7104 | 0.7755 | 0.7169 | 0.8444 | 0.5714 |

| Experiment_provincial_9 fold_3 | 0.75 | 0.7654 | 0.7727 | 0.7234 | 0.8293 | 0.6666 |

| Experiment_provincial_9 fold_4 | 0.75 | 0.7644 | 0.7561 | 0.8378 | 0.6888 | 0.8286 |

| Experiment_provincial_9 fold_5 | 0.7125 | 0.6466 | 0.7965 | 0.7143 | 0.8999 | 0.4 |

| Experiment_provincial_9 | 0.7475 | 0.7379 | 0.79 | 0.7621 | 0.8290 | 0.6244 |

New fold split

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_provincial_10 fold_0 | 0.6704 | |||||

| Experiment_provincial_10 fold_1 | 0.6527 | |||||

| Experiment_provincial_10 fold_2 | 0.725 | |||||

| Experiment_provincial_10 fold_3 | ||||||

| Experiment_provincial_10 fold_4 | ||||||

| Experiment_provincial_10 |

A2C + A3C + A4C

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

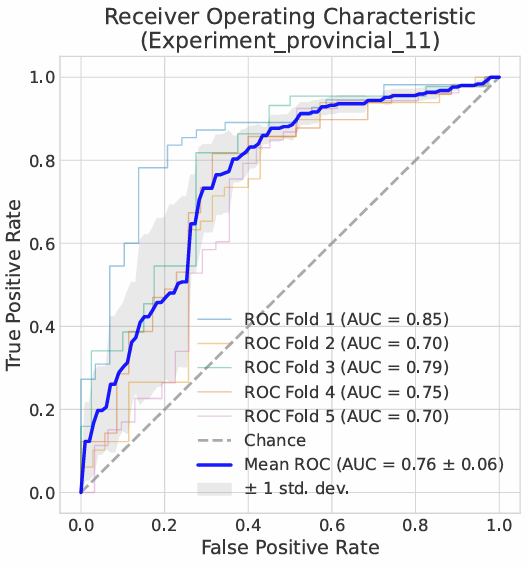

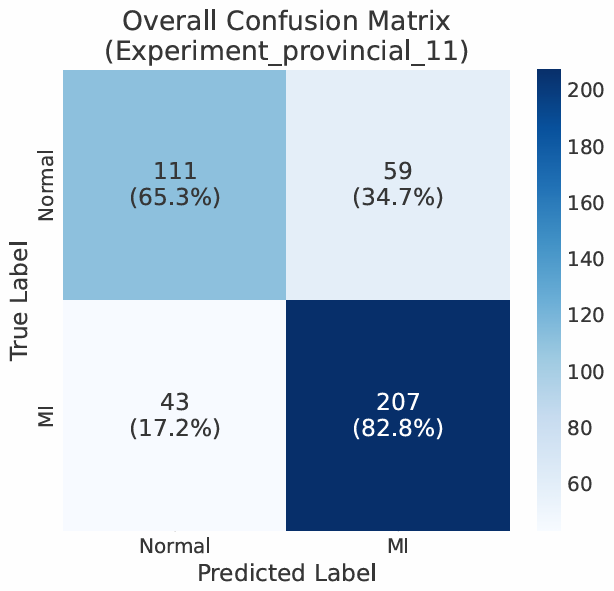

| Experiment_provincial_11 fold_1 | 0.8095 | 0.8508 | 0.8545 | 0.8545 | 0.8545 | 0.7241 |

| Experiment_provincial_11 fold_2 | 0.7381 | 0.7038 | 0.7924 | 0.7368 | 0.8571 | 0.5714 |

| Experiment_provincial_11 fold_3 | 0.75 | 0.7897 | 0.7640 | 0.7555 | 0.7727 | 0.725 |

| Experiment_provincial_11 fold_4 | 0.75 | 0.7481 | 0.7921 | 0.7692 | 0.8163 | 0.6571 |

| Experiment_provincial_11 fold_5 | 0.7381 | 0.6962 | 0.8 | 0.7719 | 0.8301 | 0.5806 |

| Experiment_provincial_11 | 0.7571 | 0.7577 | 0.8006 | 0.7776 | 0.8262 | 0.6517 |

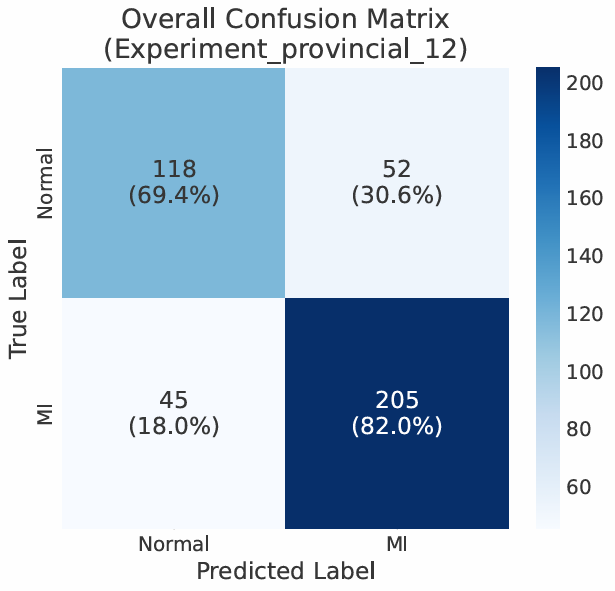

AP SAX + MV SAX + PM SAX

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

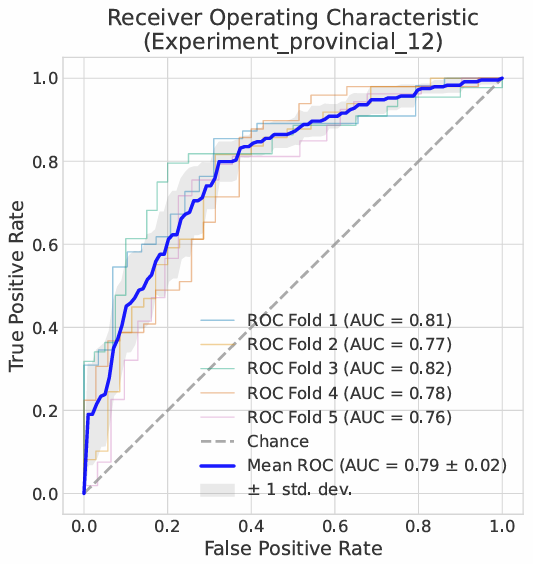

| Experiment_provincial_12 fold_1 | 0.7976 | 0.8132 | 0.8468 | 0.8393 | 0.8545 | 0.6896 |

| Experiment_provincial_12 fold_2 | 0.7619 | 0.7738 | 0.8 | 0.7843 | 0.8163 | 0.6857 |

| Experiment_provincial_12 fold_3 | 0.7857 | 0.8159 | 0.7857 | 0.8250 | 0.75 | 0.8250 |

| Experiment_provincial_12 fold_4 | 0.7619 | 0.7796 | 0.8113 | 0.7544 | 0.8775 | 0.60 |

| Experiment_provincial_12 fold_5 | 0.7381 | 0.7553 | 0.7924 | 0.7924 | 0.7924 | 0.6451 |

| Experiment_provincial_12 | 0.7690 | 0.7876 | 0.8073 | 0.7991 | 0.8182 | 0.6891 |

4. Model architecture diagram

5. SOTA experiments

5.1 camus

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| BI-Mamba | 78.75 | 73.45 | 64.87 | 85.01 | 54.85 | / |

| CARL | 83.75 | 60.33 | 73.42 | 85.56 | 67.42 | / |

| MV-Swin-T | 62.50 | 57.14 | 60.42 | 61.65 | 62.17 | / |

| CTT-Net | 62.50 | 70.04 | 70.70 | 62.96 | 82.62 | / |

| MIMamba | 85.00 | 83.15 | 77.78 | 84.00 | 72.41 | 92.16 |

5.2 hmc

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| SAF-Net | 78.13 | / | 81.57 | 88.26 | 77.64 | / |

| BI-Mamba | 77.50 | 73.07 | 83.85 | 78.12 | 91.59 | / |

| CARL | 85.62 | 45.56 | 87.30 | 86.43 | 93.13 | / |

| MV-Swin-T | 75.63 | 80.62 | 76.82 | 70.63 | 73.83 | / |

| CTT-Net | 72.50 | 78.39 | 71.38 | 73.54 | 71.00 | / |

| LVSnake | 83.09 | / | 87.17 | 86.49 | 90.11 | / |

| MIMamba | 86.25 | 91.08 | 88.99 | 90.93 | 87.78 | 85.58 |

6. Single-view comparison experiments

6.1 camus

Summary

| view | name | acc | auc | f1 | precision | recall |

|---|---|---|---|---|---|---|

| A4C | logvmamba | 0.7625 | 0.7069 | 0.5456 | 0.8605 | 0.4575 |

| A4C | lkm_unet | 0.7875 | 0.7537 | 0.5917 | 0.8466 | 0.5424 |

| A4C | ukan3d | 0.7125 | 0.7374 | 0.6782 | 0.5837 | 0.8257 |

| A4C | dk-mamba | 0.8063 | 0.7838 | 0.6882 | 0.7962 | 0.6181 |

| A2C | logvmamba | 0.7375 | 0.6521 | 0.5873 | 0.7558 | 0.5161 |

| A2C | lkm_unet | 0.7250 | 0.7202 | 0.6595 | 0.6790 | 0.7454 |

| A2C | ukan3d | 0.7063 | 0.7040 | 0.6351 | 0.5832 | 0.7060 |

| A2C | dk-mamba | 0.7750 | 0.7790 | 0.6834 | 0.6880 | 0.6848 |

A4C view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| logvmamba | 0.7625 | 0.7069 | 0.5456 | 0.8605 | 0.4575 | 0.9299 |

| lkm_unet | 0.7875 | 0.7537 | 0.5917 | 0.8466 | 0.5424 | 0.9184 |

| ukan3d | 0.7125 | 0.7374 | 0.6782 | 0.5837 | 0.8257 | 0.6495 |

| dk-mamba | 0.8063 | 0.7838 | 0.6882 | 0.7962 | 0.6181 | 0.9118 |

dk-mamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_138 fold_1 | 0.78125 | 0.6999 | 0.6666 | 0.7777 | 0.5833 | 0.8999 |

| Experiment_138 fold_2 | 0.78125 | 0.75 | 0.6315 | 0.8571 | 0.5 | 0.9499 |

| Experiment_138 fold_3 | 0.90625 | 0.925 | 0.8799 | 0.8461 | 0.9166 | 0.8999 |

| Experiment_138 fold_4 | 0.78125 | 0.8311 | 0.6315 | 0.75 | 0.5454 | 0.9047 |

| Experiment_138 fold_5 | 0.78125 | 0.7142 | 0.6315 | 0.75 | 0.5454 | 0.9047 |

| Experiment_138 | 0.8063 | 0.7838 | 0.6882 | 0.7962 | 0.6181 | 0.9118 |

logvmamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_133 fold_1 | 0.75 | 0.7250 | 0.6363 | 0.6999 | 0.5833 | 0.85 |

| Experiment_133 fold_2 | 0.75 | 0.5833 | 0.5555 | 0.8333 | 0.4166 | 0.9499 |

| Experiment_133 fold_3 | 0.84375 | 0.8542 | 0.8 | 0.7692 | 0.8333 | 0.85 |

| Experiment_133 fold_4 | 0.75 | 0.6883 | 0.4285 | 1.0 | 0.2727 | 1.0 |

| Experiment_133 fold_5 | 0.71875 | 0.6839 | 0.3076 | 1.0 | 0.1818 | 1.0 |

| Experiment_133 | 0.7625 | 0.7069 | 0.5456 | 0.8605 | 0.4575 | 0.9299 |

lkm_unet

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_135 fold_1 | 0.875 | 0.8833 | 0.8333 | 0.8333 | 0.8333 | 0.8999 |

| Experiment_135 fold_2 | 0.75 | 0.7624 | 0.5555 | 0.8333 | 0.4166 | 0.9499 |

| Experiment_135 fold_3 | 0.84375 | 0.85 | 0.8148 | 0.7333 | 0.9166 | 0.8 |

| Experiment_135 fold_4 | 0.78125 | 0.7273 | 0.5882 | 0.8333 | 0.4545 | 0.9523 |

| Experiment_135 fold_5 | 0.6875 | 0.5454 | 0.1666 | 1.0 | 0.0909 | 1.0 |

| Experiment_135 | 0.7875 | 0.7537 | 0.5917 | 0.8466 | 0.5424 | 0.9184 |

ukan3d

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_136 fold_1 | 0.75 | 0.8167 | 0.7333 | 0.6111 | 0.9167 | 0.6499 |

| Experiment_136 fold_2 | 0.7187 | 0.6833 | 0.64 | 0.6153 | 0.6666 | 0.75 |

| Experiment_136 fold_3 | 0.875 | 0.8708 | 0.8571 | 0.75 | 1.0 | 0.8 |

| Experiment_136 fold_4 | 0.6875 | 0.7532 | 0.6153 | 0.5333 | 0.7272 | 0.6666 |

| Experiment_136 fold_5 | 0.53125 | 0.5628 | 0.5454 | 0.4090 | 0.8182 | 0.3809 |

| Experiment_136 | 0.7125 | 0.7374 | 0.6782 | 0.5837 | 0.8257 | 0.6495 |

segformer

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_137 fold_1 | ||||||

| Experiment_137 fold_2 | 0.6875 | 0.7291 | 0.6666 | 0.5555 | 0.8333 | 0.6 |

| Experiment_137 fold_3 | ||||||

| Experiment_137 fold_4 | ||||||

| Experiment_137 fold_5 | ||||||

| Experiment_137 |

A2C view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| logvmamba | 0.7375 | 0.6521 | 0.5873 | 0.7558 | 0.5161 | 0.8629 |

| lkm_unet | 0.7250 | 0.7202 | 0.6595 | 0.6790 | 0.7454 | 0.7195 |

| ukan3d | 0.7063 | 0.7040 | 0.6351 | 0.5832 | 0.7060 | 0.7071 |

| dk-mamba | 0.7750 | 0.7790 | 0.6834 | 0.6880 | 0.6848 | 0.8233 |

dk-mamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_141 fold_1 | 0.875 | 0.85 | 0.8462 | 0.7857 | 0.9166 | 0.85 |

| Experiment_141 fold_2 | 0.8125 | 0.8042 | 0.75 | 0.75 | 0.75 | 0.85 |

| Experiment_141 fold_3 | 0.71875 | 0.7125 | 0.64 | 0.6153 | 0.6666 | 0.75 |

| Experiment_141 fold_4 | 0.875 | 0.8268 | 0.8 | 0.8888 | 0.7273 | 0.9523 |

| Experiment_141 fold_5 | 0.59375 | 0.7013 | 0.3809 | 0.4 | 0.3636 | 0.7142 |

| Experiment_141 | 0.7750 | 0.7790 | 0.6834 | 0.6880 | 0.6848 | 0.8233 |

logvmamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_134 fold_1 | 0.6875 | 0.7292 | 0.5 | 0.625 | 0.4166 | 0.85 |

| Experiment_134 fold_2 | 0.78125 | 0.6083 | 0.5882 | 1.0 | 0.4166 | 1.0 |

| Experiment_134 fold_3 | 0.71875 | 0.6375 | 0.64 | 0.6154 | 0.6666 | 0.75 |

| Experiment_134 fold_4 | 0.8125 | 0.5974 | 0.625 | 1.0 | 0.4545 | 1.0 |

| Experiment_134 fold_5 | 0.6875 | 0.6883 | 0.5833 | 0.5385 | 0.6363 | 0.7143 |

| Experiment_134 | 0.7375 | 0.6521 | 0.5873 | 0.7558 | 0.5161 | 0.8629 |

lkm_unet

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_139 fold_1 | 0.8125 | 0.7791 | 0.7272 | 0.8 | 0.6666 | 0.8999 |

| Experiment_139 fold_2 | 0.75 | 0.7166 | 0.7333 | 0.6111 | 0.9166 | 0.6499 |

| Experiment_139 fold_3 | 0.78125 | 0.7458 | 0.5882 | 1.0 | 0.4166 | 1.0 |

| Experiment_139 fold_4 | 0.59375 | 0.7186 | 0.6060 | 0.4545 | 0.9090 | 0.4285 |

| Experiment_139 fold_5 | 0.6875 | 0.6407 | 0.6428 | 0.5294 | 0.8181 | 0.6190 |

| Experiment_139 | 0.7250 | 0.7202 | 0.6595 | 0.6790 | 0.7454 | 0.7195 |

ukan3d

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_140 fold_1 | 0.71875 | 0.6833 | 0.64 | 0.6154 | 0.6666 | 0.75 |

| Experiment_140 fold_2 | 0.75 | 0.7583 | 0.7142 | 0.625 | 0.8333 | 0.6999 |

| Experiment_140 fold_3 | 0.75 | 0.7625 | 0.6666 | 0.6666 | 0.6666 | 0.8 |

| Experiment_140 fold_4 | 0.625 | 0.6883 | 0.5714 | 0.4705 | 0.7272 | 0.5714 |

| Experiment_140 fold_5 | 0.6875 | 0.6277 | 0.5833 | 0.5384 | 0.6363 | 0.7143 |

| Experiment_140 | 0.7063 | 0.7040 | 0.6351 | 0.5832 | 0.7060 | 0.7071 |

6.2 hmc

Summary

| view | name | acc | auc | f1 | precision | recall |

|---|---|---|---|---|---|---|

| A4C | logvmamba | 0.7438 | 0.6974 | 0.8070 | 0.7626 | 0.8652 |

| A4C | lkm_unet | 0.7875 | 0.7640 | 0.8520 | 0.7642 | 0.9669 |

| A4C | ukan3d | 0.7938 | 0.7951 | 0.8569 | 0.7723 | 0.9692 |

| A4C | dkmamba | 0.8313 | 0.8728 | 0.8625 | 0.8899 | 0.8395 |

| A2C | logvmamba | 0.75 | 0.69148 | 0.81084 | 0.77048 | 0.86518 |

| A2C | lkm_unet | 0.75 | 0.7635 | 0.8220 | 0.7507 | 0.9114 |

| A2C | ukan3d | 0.7563 | 0.7538 | 0.8346 | 0.75222 | 0.9540 |

| A2C | dkmamba | 0.825 | 0.8574 | 0.8534 | 0.9141 | 0.8039 |

A4C view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| logvmamba | 0.7438 | 0.6974 | 0.8070 | 0.7626 | 0.8652 | 0.5175 |

| lkm_unet | 0.7875 | 0.7640 | 0.8520 | 0.7642 | 0.9669 | 0.4589 |

| ukan3d | 0.7938 | 0.7951 | 0.8569 | 0.7723 | 0.9692 | 0.4771 |

| dkmamba | 0.8313 | 0.8728 | 0.8625 | 0.8899 | 0.8395 | 0.7967 |

dkmamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_147 fold_1 | 0.90625 | 0.9468 | 0.9362 | 0.9167 | 0.9565 | 0.7777 |

| Experiment_147 fold_2 | 0.84375 | 0.9134 | 0.8780 | 0.8999 | 0.8571 | 0.8181 |

| Experiment_147 fold_3 | 0.84375 | 0.9020 | 0.8387 | 0.9285 | 0.7647 | 0.9333 |

| Experiment_147 fold_4 | 0.75 | 0.7705 | 0.8095 | 0.8095 | 0.8095 | 0.6363 |

| Experiment_147 fold_5 | 0.8125 | 0.8311 | 0.85 | 0.8947 | 0.8095 | 0.8181 |

| Experiment_147 | 0.8313 | 0.8728 | 0.8625 | 0.8899 | 0.8395 | 0.7967 |

logvmamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_148 fold_1 | 0.8125 | 0.9082 | 0.8695 | 0.8695 | 0.8695 | 0.6666 |

| Experiment_148 fold_2 | 0.875 | 0.8311 | 0.9047 | 0.9047 | 0.9047 | 0.8181 |

| Experiment_148 fold_3 | 0.5625 | 0.4666 | 0.6111 | 0.5789 | 0.6470 | 0.4666 |

| Experiment_148 fold_4 | 0.75 | 0.6320 | 0.8260 | 0.7599 | 0.9047 | 0.4545 |

| Experiment_148 fold_5 | 0.71875 | 0.6493 | 0.8235 | 0.6999 | 1.0 | 0.1818 |

| Experiment_148 | 0.7438 | 0.6974 | 0.8070 | 0.7626 | 0.8652 | 0.5175 |

lkm_unet

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_149 fold_1 | 0.875 | 0.8309 | 0.92 | 0.8518 | 1.0 | 0.5555 |

| Experiment_149 fold_2 | 0.875 | 0.8268 | 0.9130 | 0.8399 | 1.0 | 0.6363 |

| Experiment_149 fold_3 | 0.6875 | 0.7294 | 0.75 | 0.6521 | 0.8823 | 0.4666 |

| Experiment_149 fold_4 | 0.6875 | 0.6017 | 0.8077 | 0.6774 | 1.0 | 0.0909 |

| Experiment_149 fold_5 | 0.8125 | 0.8311 | 0.8695 | 0.8 | 0.9523 | 0.5454 |

| Experiment_149 | 0.7875 | 0.7640 | 0.8520 | 0.7642 | 0.9669 | 0.4589 |

ukan3d

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_146 fold_1 | 0.875 | 0.9565 | 0.92 | 0.8518 | 1.0 | 0.5555 |

| Experiment_146 fold_2 | 0.875 | 0.8398 | 0.9130 | 0.8399 | 1.0 | 0.6363 |

| Experiment_146 fold_3 | 0.71875 | 0.7333 | 0.7804 | 0.6666 | 0.9411 | 0.4666 |

| Experiment_146 fold_4 | 0.6875 | 0.5974 | 0.8077 | 0.6774 | 1.0 | 0.0909 |

| Experiment_146 fold_5 | 0.8125 | 0.8484 | 0.8636 | 0.8260 | 0.9047 | 0.6363 |

| Experiment_146 | 0.7938 | 0.7951 | 0.8569 | 0.7723 | 0.9692 | 0.4771 |

A2C view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| logvmamba | 0.75 | 0.69148 | 0.81084 | 0.77048 | 0.86518 | 0.53974 |

| lkm_unet | 0.75 | 0.7635 | 0.8220 | 0.7507 | 0.9114 | 0.4598 |

| ukan3d | 0.7563 | 0.7538 | 0.8346 | 0.75222 | 0.9540 | 0.4311 |

| dkmamba | 0.825 | 0.8574 | 0.8534 | 0.9141 | 0.8039 | 0.8553 |

dkmamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_145 fold_1 | 0.84375 | 0.8792 | 0.8837 | 0.9499 | 0.8261 | 0.8888 |

| Experiment_145 fold_2 | 0.78125 | 0.8009 | 0.8108 | 0.9375 | 0.7143 | 0.9090 |

| Experiment_145 fold_3 | 0.84375 | 0.9058 | 0.8387 | 0.9285 | 0.7647 | 0.9333 |

| Experiment_145 fold_4 | 0.78125 | 0.8052 | 0.8292 | 0.85 | 0.8095 | 0.7272 |

| Experiment_145 fold_5 | 0.875 | 0.8961 | 0.9047 | 0.9047 | 0.9047 | 0.8181 |

| Experiment_145 | 0.825 | 0.8574 | 0.8534 | 0.9141 | 0.8039 | 0.8553 |

logvmamba

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_142 fold_1 | 0.84375 | 0.9082 | 0.8888 | 0.9090 | 0.8695 | 0.7777 |

| Experiment_142 fold_2 | 0.875 | 0.8311 | 0.9047 | 0.9047 | 0.9047 | 0.8181 |

| Experiment_142 fold_3 | 0.5625 | 0.4627 | 0.6111 | 0.5789 | 0.6470 | 0.4666 |

| Experiment_142 fold_4 | 0.75 | 0.6277 | 0.8261 | 0.7599 | 0.9047 | 0.4545 |

| Experiment_142 fold_5 | 0.71875 | 0.6277 | 0.8235 | 0.6999 | 1.0 | 0.1818 |

| Experiment_142 | 0.75 | 0.69148 | 0.81084 | 0.77048 | 0.86518 | 0.53974 |

lkm_unet

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_143 fold_1 | 0.78125 | 0.7874 | 0.8571 | 0.8076 | 0.9130 | 0.4444 |

| Experiment_143 fold_2 | 0.78125 | 0.7748 | 0.8444 | 0.7916 | 0.9047 | 0.5454 |

| Experiment_143 fold_3 | 0.65625 | 0.6235 | 0.7317 | 0.625 | 0.8823 | 0.4 |

| Experiment_143 fold_4 | 0.78125 | 0.8181 | 0.8510 | 0.7692 | 0.9523 | 0.4545 |

| Experiment_143 fold_5 | 0.75 | 0.8138 | 0.8260 | 0.7599 | 0.9047 | 0.4545 |

| Experiment_143 | 0.75 | 0.7635 | 0.8220 | 0.7507 | 0.9114 | 0.4598 |

ukan3d

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_144 fold_1 | 0.90625 | 0.8695 | 0.9333 | 0.9545 | 0.9130 | 0.8888 |

| Experiment_144 fold_2 | 0.78125 | 0.7922 | 0.8510 | 0.7692 | 0.9523 | 0.4545 |

| Experiment_144 fold_3 | 0.65625 | 0.7176 | 0.7555 | 0.6071 | 1.0 | 0.2666 |

| Experiment_144 fold_4 | 0.6875 | 0.5670 | 0.7999 | 0.6896 | 0.9523 | 0.1818 |

| Experiment_144 fold_5 | 0.75 | 0.8225 | 0.8333 | 0.7407 | 0.9523 | 0.3636 |

| Experiment_144 | 0.7563 | 0.7538 | 0.8346 | 0.75222 | 0.9540 | 0.4311 |

7. Ablation experiments

7.1 camus

Semantic calibration, two-chamber view supplement

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| -semantic calibration -two-chamber view | 80.00 | 76.98 | 66.13 | 83.45 | 58.87 | 91.14 |

| +semantic calibration -two-chamber view | 83.13 | 85.91 | 75.99 | 77.93 | 75.90 | 87.23 |

| -semantic calibration +two-chamber view | 81.88 | 83.04 | 73.23 | 76.83 | 70.60 | 88.19 |

| +semantic calibration +two-chamber view | 85.00 | 83.15 | 77.78 | 84.00 | 72.41 | 92.16 |

-semantic calibration -two-chamber view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_150 fold_1 | 0.875 | 0.8458 | 0.8333 | 0.8333 | 0.8333 | 0.8999 |

| Experiment_150 fold_2 | 0.71875 | 0.7666 | 0.4 | 1.0 | 0.25 | 1.0 |

| Experiment_150 fold_3 | 0.8125 | 0.8083 | 0.7692 | 0.7142 | 0.8333 | 0.8 |

| Experiment_150 fold_4 | 0.875 | 0.8095 | 0.7777 | 1.0 | 0.6363 | 1.0 |

| Experiment_150 fold_5 | 0.71875 | 0.6190 | 0.5263 | 0.625 | 0.4545 | 0.8571 |

| Experiment_150 | 0.8 | 0.7698 | 0.6613 | 0.8345 | 0.5887 | 0.9114 |

+semantic calibration -two-chamber view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_151 fold_1 | 0.90625 | 0.9666 | 0.8695 | 0.9090 | 0.8333 | 0.9499 |

| Experiment_151 fold_2 | 0.75 | 0.7375 | 0.6 | 0.75 | 0.5 | 0.8999 |

| Experiment_151 fold_3 | 0.8125 | 0.8208 | 0.7857 | 0.6875 | 0.9166 | 0.75 |

| Experiment_151 fold_4 | 0.84375 | 0.8398 | 0.7826 | 0.75 | 0.8181 | 0.8571 |

| Experiment_151 fold_5 | 0.84375 | 0.9307 | 0.7619 | 0.8 | 0.7272 | 0.9047 |

| Experiment_151 | 0.83125 | 0.85908 | 0.75994 | 0.77930 | 0.75904 | 0.87232 |

-semantic calibration +two-chamber view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_152 fold_1 | 0.90625 | 0.9 | 0.8799 | 0.8461 | 0.9166 | 0.8999 |

| Experiment_152 fold_2 | 0.75 | 0.7625 | 0.6363 | 0.6999 | 0.5833 | 0.85 |

| Experiment_152 fold_3 | 0.78125 | 0.7666 | 0.6956 | 0.7272 | 0.6666 | 0.85 |

| Experiment_152 fold_4 | 0.875 | 0.8658 | 0.8181 | 0.8181 | 0.8181 | 0.9047 |

| Experiment_152 fold_5 | 0.78125 | 0.8571 | 0.6315 | 0.75 | 0.5454 | 0.9047 |

| Experiment_152 | 0.81875 | 0.8304 | 0.73228 | 0.76826 | 0.706 | 0.88186 |

7.2 hmc

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| -semantic calibration -two-chamber view | 81.25 | 80.17 | 85.32 | 84.98 | 86.83 | 73.41 |

| +semantic calibration -two-chamber view | 85.62 | 87.37 | 88.52 | 90.44 | 87.20 | 81.98 |

| -semantic calibration +two-chamber view | 82.50 | 86.54 | 84.51 | 94.60 | 77.08 | 90.51 |

| +semantic calibration +two-chamber view | 86.25 | 91.08 | 88.99 | 90.93 | 87.78 | 85.58 |

-semantic calibration -two-chamber view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_153 fold_1 | 0.90625 | 0.9468 | 0.9333 | 0.9545 | 0.9130 | 0.8888 |

| Experiment_153 fold_2 | 0.84375 | 0.7878 | 0.8837 | 0.8636 | 0.9047 | 0.7272 |

| Experiment_153 fold_3 | 0.8125 | 0.8196 | 0.8499 | 0.7391 | 1.0 | 0.60 |

| Experiment_153 fold_4 | 0.75 | 0.7229 | 0.8095 | 0.8095 | 0.8095 | 0.6363 |

| Experiment_153 fold_5 | 0.75 | 0.7316 | 0.7895 | 0.8824 | 0.7142 | 0.8181 |

| Experiment_153 | 0.8125 | 0.80174 | 0.85318 | 0.84982 | 0.86828 | 0.73408 |

+semantic calibration -two-chamber view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_154 fold_1 | 0.84375 | 0.8502 | 0.8888 | 0.9090 | 0.8695 | 0.7777 |

| Experiment_154 fold_2 | 0.84375 | 0.9004 | 0.8888 | 0.8333 | 0.9523 | 0.6363 |

| Experiment_154 fold_3 | 0.84375 | 0.8470 | 0.8484 | 0.875 | 0.8235 | 0.8666 |

| Experiment_154 fold_4 | 0.875 | 0.9177 | 0.8947 | 1.0 | 0.8095 | 1.0 |

| Experiment_154 fold_5 | 0.875 | 0.8528 | 0.9047 | 0.9047 | 0.9047 | 0.8181 |

| Experiment_154 | 0.8562 | 0.8737 | 0.8852 | 0.9044 | 0.8720 | 0.8198 |

-semantic calibration +two-chamber view

| name | acc | auc | f1 | precision | recall | specificity |

|---|---|---|---|---|---|---|

| Experiment_155 fold_1 | 0.84375 | 0.8985 | 0.8837 | 0.9499 | 0.8260 | 0.8888 |

| Experiment_155 fold_2 | 0.71875 | 0.7792 | 0.7567 | 0.875 | 0.6666 | 0.8181 |

| Experiment_155 fold_3 | 0.8125 | 0.8745 | 0.7857 | 1.0 | 0.6470 | 1.0 |

| Experiment_155 fold_4 | 0.875 | 0.9177 | 0.8947 | 1.0 | 0.8095 | 1.0 |

| Experiment_155 fold_5 | 0.875 | 0.8571 | 0.9047 | 0.9047 | 0.9047 | 0.8181 |

| Experiment_155 | 0.8250 | 0.8654 | 0.8451 | 0.9460 | 0.7708 | 0.9051 |

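8. Writing notes

8.1 Innovation 1

Use text to calibrate the multi-level memory features: "hierarchical collaborative refinement" of Mamba selective memory and semantic gating

KnowledgeFusion acts at multiple scales.

- Framing: Mamba decides "what to record" along the spatiotemporal dimension, while the text vectors decide "what to correct" along the semantic dimension.

- Innovation: The model combines Mamba's **long-range selective scan** with **multi-level semantic gated fusion**. In the lower layers, the Mamba encoder uses its scanning algorithm to automatically capture the motion of anatomical structures in the video, while the decoder stage injects text knowledge vectors to perform "semantic calibration" on the abstract states extracted by Mamba.

- Mechanism: This "scan first, calibrate afterwards" strategy uses the text vectors as semantic anchors: it enhances key lesion features that Mamba may miss (such as specific wall-motion abnormalities) and suppresses imaging artifacts picked up during scanning.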
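The gated calibration described in the list above could take roughly the following shape. This is only one plausible form of gated fusion, not the KnowledgeFusion module itself: the convex gate `g * feature + (1 - g) * knowledge`, the scalar weights, the toy vectors and the `dec1` entry are all assumptions made for illustration (only dec0 and dec2 are named in these notes).

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(feature, knowledge, w_f, w_k, bias):
    """Blend each feature element with the knowledge vector through a learned gate.

    gate = sigmoid(w_f * f + w_k * k + bias); output = gate * f + (1 - gate) * k
    """
    fused = []
    for f, k in zip(feature, knowledge):
        gate = sigmoid(w_f * f + w_k * k + bias)
        fused.append(gate * f + (1.0 - gate) * k)
    return fused


# Toy decoder features at three scales and one text knowledge vector.
decoder_features = {
    "dec0": [0.2, -1.0, 0.5],
    "dec1": [1.5, 0.0, -0.3],
    "dec2": [-0.7, 0.9, 0.1],
}
knowledge_vector = [0.4, 0.4, -0.2]

# Apply the same calibration at every scale ("acts at multiple scales").
calibrated = {
    name: gated_fusion(feat, knowledge_vector, w_f=1.0, w_k=1.0, bias=0.0)
    for name, feat in decoder_features.items()
}
print(calibrated)
```

Because the gate lies strictly between 0 and 1, each calibrated value stays between the visual feature and the knowledge value, so the text can correct a feature but not push it outside that range. In a real model the weights would be learned per channel and per scale.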

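8.2 Innovation 2

Multi-view: "higher-order association enhancement" between dual-path spatiotemporal features and clinical semantics

- Framing: The model fuses "spatiotemporal features compressed by Mamba" with "visual features refined by text".

- Innovation: Unlike a simple single-stream network, the model builds a dual-path learning framework. Path A uses Mamba's selective state space to efficiently compress the high-dimensional A4C video sequence; Path B uses the EchoPrime encoder for text-guided refinement of the A2C features.

- Significance: By fusing the two paths nonlinearly through ClsMLP, the model in effect simulates clinical decision-making: it draws on both the dynamic physical motion captured by Mamba (spatio-temporal selection) and the anatomical logic defined by clinical text (semantic refinement), which significantly improves the robustness of the binary classification task.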

Unlike previous Transformer-based methods that suffer from quadratic complexity, our model leverages the Selective State Space (Mamba) to efficiently capture dynamic cardiac motions. The core innovation lies in a Text-Guided Refinement strategy: we utilize cross-modal similarity to activate relevant clinical priors, which subsequently act as semantic governors to refine the selective memory states of the Mamba backbone at multiple scales.
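The "cross-modal similarity to activate relevant clinical priors" step in the paragraph above can be illustrated with a small sketch. It assumes cosine similarity followed by a temperature-scaled softmax over a bank of text embeddings; the function names, the temperature value and the two-dimensional toy embeddings are hypothetical and not taken from the model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def activate_priors(video_emb, text_embs, temperature=0.1):
    """Weight each text prior by its similarity to the video embedding."""
    sims = [cosine(video_emb, text) for text in text_embs]
    top = max(sims)  # subtract the maximum for a numerically stable softmax
    exps = [math.exp((s - top) / temperature) for s in sims]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(text_embs[0])
    knowledge = [sum(w * text[i] for w, text in zip(weights, text_embs))
                 for i in range(dim)]
    return weights, knowledge


# Toy embeddings: the video matches the first text prior far better than the second.
video_embedding = [1.0, 0.0]
text_priors = [[1.0, 0.0], [0.0, 1.0]]
weights, knowledge = activate_priors(video_embedding, text_priors)
print(weights, knowledge)
```

The resulting knowledge vector is dominated by the best-matching prior and would then be passed to the gating stage at each decoder scale. A lower temperature makes the selection sharper.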